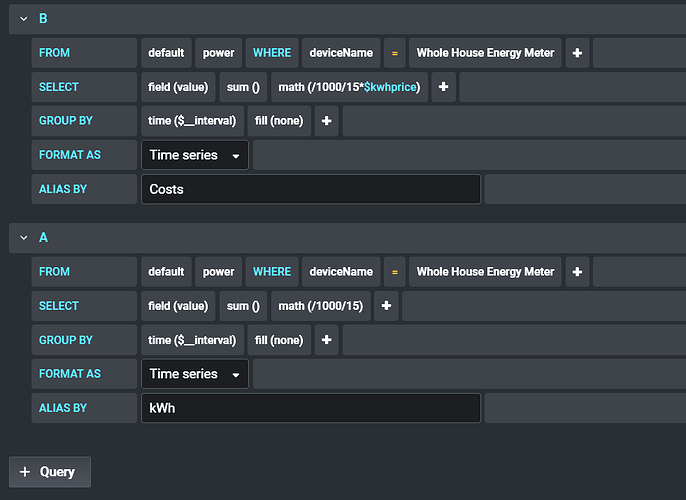

Like this. The math may change based on your data, but you have to get it into kWh.

Here's the JSON for the 7-day panel.

```json
{
  "aliasColors": {
    "Costs": "#7eb26d",
    "kWh": "#eab839"
  },
  "bars": true,
  "dashLength": 10,
  "dashes": false,
  "datasource": "$powerdatasource",
  "decimals": 2,
  "editable": true,
  "error": false,
  "fieldConfig": {
    "defaults": {
      "custom": {},
      "links": []
    },
    "overrides": []
  },
  "fill": 1,
  "fillGradient": 0,
  "grid": {},
  "gridPos": {
    "h": 8,
    "w": 9,
    "x": 0,
    "y": 11
  },
  "hiddenSeries": false,
  "hideTimeOverride": false,
  "id": 31,
  "interval": "1d",
  "legend": {
    "alignAsTable": true,
    "avg": true,
    "current": true,
    "hideEmpty": false,
    "hideZero": false,
    "max": true,
    "min": false,
    "rightSide": false,
    "show": true,
    "total": true,
    "values": true
  },
  "lines": false,
  "linewidth": 2,
  "links": [],
  "nullPointMode": "connected",
  "paceLength": 10,
  "percentage": false,
  "pointradius": 5,
  "points": false,
  "renderer": "flot",
  "seriesOverrides": [
    {
      "$$hashKey": "object:6028",
      "alias": "Costs",
      "yaxis": 2
    }
  ],
  "spaceLength": 10,
  "span": 12,
  "stack": false,
  "steppedLine": false,
  "targets": [
    {
      "alias": "Costs",
      "dsType": "influxdb",
      "groupBy": [
        {
          "params": [
            "$__interval"
          ],
          "type": "time"
        },
        {
          "params": [
            "none"
          ],
          "type": "fill"
        }
      ],
      "hide": false,
      "measurement": "power",
      "orderByTime": "ASC",
      "policy": "default",
      "query": "SELECT sum(\"value\") * 0.22/1000 FROM \"power\" WHERE \"host\" = 'mainpower' AND $timeFilter GROUP BY time(1d) fill(null)",
      "refId": "B",
      "resultFormat": "time_series",
      "select": [
        [
          {
            "params": [
              "value"
            ],
            "type": "field"
          },
          {
            "params": [],
            "type": "sum"
          },
          {
            "params": [
              "/1000/15*$kwhprice"
            ],
            "type": "math"
          }
        ]
      ],
      "tags": [
        {
          "key": "deviceName",
          "operator": "=~",
          "value": "/^$Device$/"
        }
      ]
    },
    {
      "alias": "kWh",
      "dsType": "influxdb",
      "groupBy": [
        {
          "params": [
            "$__interval"
          ],
          "type": "time"
        },
        {
          "params": [
            "none"
          ],
          "type": "fill"
        }
      ],
      "hide": false,
      "measurement": "power",
      "orderByTime": "ASC",
      "policy": "default",
      "query": "SELECT sum(\"value\") FROM \"power\" WHERE \"host\" = 'mainpower' AND $timeFilter GROUP BY time(1d) fill(null)",
      "refId": "A",
      "resultFormat": "time_series",
      "select": [
        [
          {
            "params": [
              "value"
            ],
            "type": "field"
          },
          {
            "params": [],
            "type": "sum"
          },
          {
            "params": [
              "/1000/15"
            ],
            "type": "math"
          }
        ]
      ],
      "tags": [
        {
          "key": "deviceName",
          "operator": "=~",
          "value": "/^$Device$/"
        }
      ]
    }
  ],
  "thresholds": [],
  "timeFrom": "7d",
  "timeRegions": [],
  "timeShift": null,
  "title": "Last 7 Day Power Consumption/Costs",
  "tooltip": {
    "msResolution": false,
    "shared": true,
    "sort": 0,
    "value_type": "cumulative"
  },
  "type": "graph",
  "xaxis": {
    "buckets": null,
    "mode": "time",
    "name": null,
    "show": true,
    "values": []
  },
  "yaxes": [
    {
      "$$hashKey": "object:8675",
      "decimals": 1,
      "format": "kwatth",
      "label": "Usage",
      "logBase": 1,
      "max": "200",
      "min": null,
      "show": true
    },
    {
      "$$hashKey": "object:8676",
      "decimals": 2,
      "format": "currencyUSD",
      "label": "Cost",
      "logBase": 1,
      "max": null,
      "min": "0",
      "show": true
    }
  ],
  "yaxis": {
    "align": true,
    "alignLevel": null
  },
  "pluginVersion": "7.3.1"
}
```
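
If it helps to sanity-check the math before putting it in the panel, here is a rough Python sketch of what the `/1000/15` step appears to do. It assumes the stored values are instantaneous watt readings and that the 15 is the number of readings per hour (one every four minutes); the post doesn't say that, so adjust `samples_per_hour` to match your own data. The function names and the 0.22 price are only for illustration.

```python
def watts_to_kwh(readings_w, samples_per_hour):
    """Convert evenly spaced instantaneous watt readings to kWh.

    Summing the readings and dividing by the number of readings per hour
    gives watt-hours; dividing by 1000 gives kilowatt-hours.
    """
    if samples_per_hour <= 0:
        raise ValueError("samples_per_hour must be positive")
    return sum(readings_w) / samples_per_hour / 1000.0


def energy_cost(kwh, price_per_kwh):
    """Cost of the energy at a flat price per kWh."""
    return kwh * price_per_kwh


if __name__ == "__main__":
    # Assumed data: a constant 1000 W load sampled 15 times per hour for one day.
    day = [1000.0] * (15 * 24)
    kwh = watts_to_kwh(day, samples_per_hour=15)
    print(f"{kwh:.2f} kWh, cost {energy_cost(kwh, 0.22):.2f}")
```

If your sensor reports once a minute you would divide by 60 instead of 15, and if it already reports accumulated Wh you would skip that division entirely.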

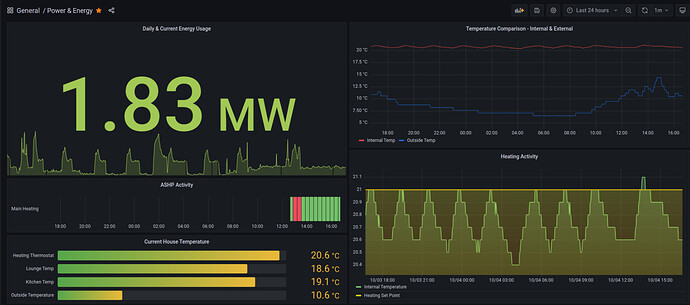

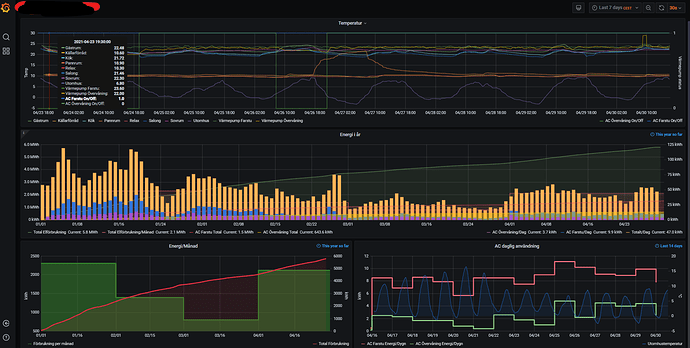

to attempt to map energy usage against my heat pump. I've read about flash drive damage with lots of writes - should I be worried?
