Part of the reason I finally started to look at asynchronous HTTP calls in my drivers. Still a couple left to do....

FWIW:

- Ecobee Suite uses Asynchronous HTTP extensively - almost everywhere (except for a few command calls that don’t require waiting for a response).

- If CPU resources get scarce, ALL applications will get less CPU time, and thus run slower. This doesn’t mean the app is the cause of the issue - it quite possibly could be a victim of some other issue.

- Reports of issues regarding CPU usage in ES are few and far between - literally thousands of installations running

- Running poll cycles of 1 minute has repeatedly proven to improve the situation (because there is less for ES to do during each cycle). Hopefully switching from 2 minutes to 1 minute will improve things for you…

- Make sure that ALL of your devices are set to use short state/event history - a value of 11 for both is recommended.

- Check the size of the DB on your hub - I have 260+ devices and my DB runs about 30-40MB (thanks to optimizations by the Hubitat team over the last 6 months - mine used to be over 140MB daily).

If all else fails, try pausing all your Helpers for a while and see if things work better. Or disable/remove ES altogether.

Remember, running ES is a choice, not a requirement. Feel free to uninstall it and use something else if you aren’t realizing sufficient benefits.

@sburke781 I have been getting sporadic severe load warnings since I started using Hubitat in August. I first noticed them after I began using the IFTTT plug-in. I use IFTTT only to keep a couple of contact closures in sync, but that may have been a false flag, as I got one with IFTTT paused.

When I moved to .121, I experienced daily lockups that left the hub unreachable, even by the diagnostic tool, so I had to pull the plug. On .123 I ran stable for days, but adding devices resulted in the 500 error bug and I upgraded to .124. I still have not experienced a lockup or a severe CPU load message on this build.

To @thebearmay's point, a load of 1 has never triggered a hub warning; a load of about 2.5 seems to be where it triggers for me.

However, this did kick off some rather obsessive monitoring of hub stats for me. The story is always the same. I can run for a couple of days at hub loads of 0.00 to 0.15 or so. Then, without warning, the hub load will increase 25 fold within a ten minute span to around 1.00. There is also a dramatic increase in hub operating temp, from a 35C average to about a 47C average. From there, no amount of pausing devices that make calls brings the load down. It will hover between 1.00 and 1.15 until I reboot it, when this cycle starts again. I have also performed a soft reset, but alas.

Am I experiencing slowdowns under these loads? No... and I could just be looking for trouble where there is none, but in my mind a 25x increase in hub load with few jobs scheduled that will not subside seems like an issue to me. I do not want to diminish the hub life or make it work harder than it should be.

@gopher.ny has been graciously talking to me off-line about this and I have appreciated it, so here is some more info that might be helpful.

I am finally getting to a place where I have built my automations and I just want to live in my smart home and let the hub run for a while, so even though I love HE, it's a little frustrating. I appreciate everyone's time.

I can understand that would be a frustrating journey. Good to hear you have been getting the personal support many have enjoyed from the support team. Also good to see you reach out to the second-tier support (us). I would also want to understand the elevated CPU usage myself; I have been doing something similar on my old C-4 as I transition to my newer C-7's.

It's rare, but not impossible, that the issues lie with built-in apps. So I would suggest forgoing the convenience of some Community apps for a period of time to try and understand those which may be causing your elevated load. If that doesn't resolve the issue, then.... hmmm..... Anyone...? That will be one for support for sure....

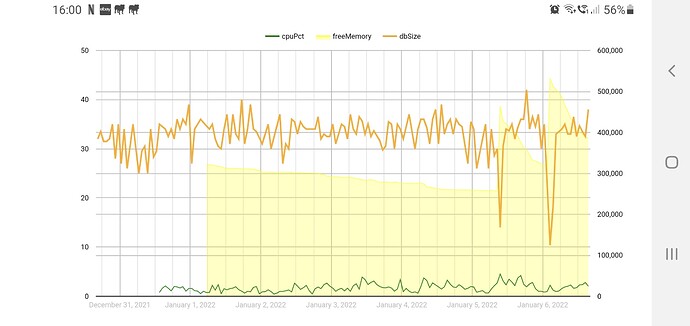

Sounds like you are on the right track with this, and with @gopher.ny assisting you it's only a matter of time before you find a solution. I'm sure you're looking at these already, but my usual checks for clues to CPU load are:

- amount of http traffic

- free memory

- DB size

@sburke781 Thanks! Yes, now that I have adjusted all state and history sizes to 11 across the board, and updated the polling of my thermostat suite to 1 minute as suggested, we will see how this plays out. As you suggest, my next step will be booting to safe mode and just running there for a while.

@thebearmay I am using this link for processor history: http://yourhubhere/hub/advanced/freeOSMemoryHistory. Is the number after the time stamp free memory? If so, I do see a decline. For example, at reboot I was at 662860; 9 hours later I was at 507856.

At the last time I rebooted, I was at about 376040. I do have logging for almost everything I do turned on, could excessive logging be causing a memory drain?

I would think the two likely candidates would be apps / drivers that either produce excessive Events (DB writes) or long-running HTTP calls, as (someone) suggested.

Correct, the first number after the timestamp is free memory, and the 2nd number is the CPU 5 minute average load.
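For anyone who wants to track those two columns over time, here is a rough Python sketch that pulls them out of the history text. It assumes each line is comma-separated with the timestamp first, which is my guess at the layout and not something confirmed in this thread. The function name, timestamps, header text, and load values in the sample are made up for illustration; only the two free memory readings come from the posts above.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    timestamp: str
    free_memory: int      # first number after the timestamp
    cpu_load_5min: float  # second number after the timestamp


def parse_history(text: str) -> list[Sample]:
    """Parse history text, assuming comma-separated lines (an assumption)."""
    samples = []
    for line in text.splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) < 3:
            continue  # blank or incomplete line
        try:
            samples.append(Sample(parts[0], int(parts[1]), float(parts[2])))
        except ValueError:
            continue  # header row or malformed line
    return samples


# Hypothetical sample: timestamps, header, and load values are invented;
# the free memory values are the ones quoted in the thread.
example = """Date/time,Free OS,5m CPU avg
10-22 01:21:00,662860,0.05
10-22 10:21:00,507856,0.12
"""

samples = parse_history(example)
for s in samples:
    print(s.timestamp, s.free_memory, s.cpu_load_5min)
```

Lines that do not parse as numbers are skipped, so a header row or a partial last line will not break it.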

Making sure I’m correct here. Having lots of logging on rules enabled would eat up more available memory than having it all turned off?

I have logging turned on for pretty much everything, so I’m starting to think now that I see declining available memory, this is playing a part…

Logging will eat a little, but generally not enough to cause concern unless you see the DB start quickly growing at a large rate.

I have very little logging now, and as you can see, memory drops off and the DB size rises quickly after a reboot, and both normally level off fairly quickly.

I reboot every 2 weeks unless there is an update or a problem.

That's not a definitive reflection one way or the other on whether logging could cause excessive memory consumption, there are many variables that could contribute to that....

11 used to be the lowest recommended because setting it lower would cause a cleanup to happen after each event, but this is no longer the case. For things with a large number of states/events like Echo Speaks devices, I set both to 1; RGBW lights I set to 5 for no real reason; and sensors (motion, contact, presence, temp) are variable depending on if I'm actually going to want to look at the info.

Whenever I have had high CPU warnings, the free memory has been below 260,000, so I have set a notification for free memory below this amount. It seems that the high CPU in my case at least is from the system trying to free up memory.
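The logic behind that kind of notification can be sketched in a few lines of Python. This is only an illustration of the threshold idea, not how any Hubitat app or rule implements it. The function name is hypothetical, and the readings below 260,000 are invented so the example has something to flag. It reports only the moment the value drops below the threshold, so a hub that stays low does not alert on every reading.

```python
FREE_MEMORY_THRESHOLD = 260_000  # the value mentioned in the post above


def crossings_below(readings, threshold=FREE_MEMORY_THRESHOLD):
    """Return the indexes where free memory first drops below the threshold."""
    alerts = []
    was_below = False
    for i, value in enumerate(readings):
        is_below = value < threshold
        if is_below and not was_below:
            alerts.append(i)  # alert only on the downward crossing
        was_below = is_below
    return alerts


# The first three readings are from this thread; the rest are hypothetical.
readings = [662860, 507856, 376040, 255000, 250000, 662860, 240000]
alert_indexes = crossings_below(readings)
print(alert_indexes)
```

Here the fourth reading triggers an alert, the fifth does not (still below), and the last one triggers again because memory recovered in between.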

Gosh, that was said nicely.

I have a few motion sensors that double as Lux sensors which report data non stop. Don’t really care about the history, just that it turns lights on at the right time.

Do you have history numbers you use for things like that?

Most things like that, where I don't really care, but might want a few readings just in case, I just set to 5. I would imagine that it isn't going to make much difference on something with only a few events/states to keep in the database versus things like Echo Speaks, or a weather app.

When did that change?

2.2.8

So I rebooted last night at 1:21AM with available memory of 662860

As of 6:45PM tonight (less than 24 hours) I have available memory of 464700

Every minute report out shows it decrease. I don't know enough to know how normal this is.
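To put a number on that decline, here is a quick Python sketch using the two readings above. It assumes the drop is linear, which is a big assumption given that others in this thread see memory level off after a reboot, so treat the projection as a worst case and not a prediction. The calendar date is a placeholder, the function names are hypothetical, and the 260,000 threshold is the figure another poster mentioned earlier for when high CPU warnings tend to appear.

```python
from datetime import datetime


def decline_per_hour(start_time, start_free, end_time, end_free):
    """Average drop in free memory per hour between two readings."""
    hours = (end_time - start_time).total_seconds() / 3600
    return (start_free - end_free) / hours


def hours_until(current_free, threshold, rate_per_hour):
    """Hours until the threshold is reached if the decline stays linear."""
    return (current_free - threshold) / rate_per_hour


# The date is a placeholder; only the times of day come from the post.
start = datetime(2021, 1, 1, 1, 21)   # reboot at 1:21AM
end = datetime(2021, 1, 1, 18, 45)    # reading at 6:45PM

rate = decline_per_hour(start, 662860, end, 464700)
eta = hours_until(464700, 260_000, rate)

print(f"decline: about {rate:.0f} per hour")
print(f"hours until 260,000 at that rate: about {eta:.1f}")
```

That works out to a drop of roughly 11,400 per hour over 17.4 hours, and about 18 more hours to reach 260,000 if nothing levels off. Comparing the rate over several shorter windows would show whether the decline is actually flattening.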