Have you tried using the built-in Chromecast Integration?

Yes, I have that installed as well (I also tried to port in cast-web; work in progress).

The Chromecast integration works for TTS on my Minis, Display, and Chromecasts, but for some reason TTS does not work on my Google Home.

However, I'm not sure how to play a Spotify URL. I'll play around with it, though I assume a Spotify URI will not work.

I also tried Logitech Media; it's not working either.

If all else fails, try Echo and Alexa devices/tabs.

But this integration (Google Home Relay) might prove handy for those hard-to-integrate devices that Google Assistant supports.

I skimmed through this thread and haven’t been able to find an answer to my question. Does Assistant Relay allow for sending a text notification to Google Assistant, or does it only speak messages through one of their speakers?

Sure, here is the node server. Just back up your assistant.js file and replace it with this. Fair warning: I take no responsibility if something breaks. Towards the end of the file are a few lines you need to modify.

```javascript
var heUri = '/apps/api/2832/trigger/setGlobalVariable=test2:' + gaResponse + '?access_token=461f117c-95ad-4d52-8136-12345678789'
```

This line needs to be modified with your access token, the name of the global variable you want to set, and the app ID of your RM trigger.

Also, just below this line you need to set the IP of your Hubitat hub.
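If you'd rather not hand-edit that string every time something changes, one option is to build the path from separate settings. This is only a sketch: `buildTriggerPath` and the `HUBITAT_*` environment variable names are hypothetical (not part of Assistant Relay or Hubitat), and it deliberately keeps the same `?`/`:` stripping and `encodeURI` call that `sendReq()` uses in the server code.

```javascript
// Hypothetical helper (not part of Assistant Relay): builds the Rule Machine
// trigger path from the pieces that sendReq() hard-codes.
function buildTriggerPath(appId, varName, value, accessToken) {
  // Same clean-up as sendReq(): drop '?' and ':' so they cannot break the path
  const cleaned = value.replace(/\?/g, '').replace(/:/g, '');
  const raw = `/apps/api/${appId}/trigger/setGlobalVariable=${varName}:${cleaned}` +
    `?access_token=${accessToken}`;
  // encodeURI escapes spaces and similar characters, like the original code
  return encodeURI(raw);
}

// Hypothetical settings; the env variable names are illustrative only
const hubitat = {
  host: process.env.HUBITAT_IP || '192.168.1.129',
  appId: process.env.HUBITAT_APP_ID || '2832',
  varName: 'test2',
  token: process.env.HUBITAT_TOKEN || 'YOUR_ACCESS_TOKEN'
};

console.log(hubitat.host + buildTriggerPath(hubitat.appId, hubitat.varName, 'Hello: world?', hubitat.token));
```

The returned string is what would go into the `path` field of the `http.request` options, with `hubitat.host` as the `hostname`.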

Let me know how you get on.

```javascript
const async = require('async');
const GoogleAssistant = require('google-assistant');
const FileReader = require('wav').Reader;
const FileWriter = require('wav').FileWriter;
//const wavFileInfo = require('wav-file-info');
const terminalImage = require('terminal-image');
const path = require('path');
const fs = require('fs');
const tou8 = require('buffer-to-uint8array');
const http = require('http');

const defaultAudio = false;
let returnAudio;
let gConfig;

// Used by joinAudio() to concatenate broadcast.wav and response.wav
const playbackWriter = new FileWriter('server/playback.wav', {
  sampleRate: 24000,
  channels: 1
});

const inputFiles = [
  path.resolve(__dirname, 'broadcast.wav'),
  path.resolve(__dirname, 'response.wav')
];

const self = module.exports = {
  setupAssistant: function() {
    return new Promise((resolve, reject) => {
      const users = [];
      // Activate one GoogleAssistant instance per configured user, one at a time
      async.forEachOfLimit(global.config.users, 1, function(auth, k, cb) {
        users.push(k);
        global.config.assistants[k] = new GoogleAssistant(auth);
        const assistant = global.config.assistants[k];
        assistant.on('ready', () => cb());
        assistant.on('error', (e) => {
          console.log(`❌ Assistant Error when activating user ${k}. Trying next user ❌ \n`);
          return cb();
        });
      }, function(err) {
        if (err) return reject(err.message);
        (async () => {
          console.log(await terminalImage.file('./icon.png'));
          console.log(`Assistant Relay is now setup and running for${users.map(u => ` ${u}`)} \n`);
          console.log(`You can now visit ${global.config.baseUrl} in a browser, or send POST requests to it`);
        })();
        if (!global.config.muteStartup) {
          self.sendTextInput('broadcast Assistant Relay is now setup and running')
            .catch((e) => console.log(e));
        }
        resolve();
      });
    });
  },

  sendTextInput: function(text, n, converse) {
    return new Promise((resolve, reject) => {
      console.log(`Received command ${text} \n`);
      if (converse) returnAudio = true;
      // set the conversation query to text
      global.config.conversation.textQuery = text;
      const assistant = self.setUser(n);
      assistant.start(global.config.conversation, (conversation) => {
        return self.startConversation(conversation)
          .then(resolve)
          .catch(reject);
      });
    });
  },

  // Experimental: sends the last response.wav back as audio input (user is hard-coded)
  sendAudioInput: function() {
    const assistant = self.setUser('greg');
    fs.readFile(path.resolve(__dirname, 'response.wav'), (err, file) => {
      if (err) return console.log(err);
      assistant.start(global.config.conversation, (conversation) => {
        return self.startConversation(conversation, file)
          .then((data) => console.log(data))
          .catch((e) => console.log(e));
      });
    });
  },

  startConversation: function(conversation, file) {
    const response = {};
    const fileStream = self.outputFileStream();
    return new Promise((resolve, reject) => {
      // Only write audio when a file was supplied; text queries have none
      if (file) conversation.write(file);
      conversation
        .on('audio-data', data => {
          fileStream.write(data);
          // set a random parameter on audio url to prevent caching
          response.audio = `http://${global.config.baseUrl}/audio?v=${Math.floor(Math.random() * 100)}`;
        })
        .on('response', (text) => {
          if (text) {
            // Forward the Assistant's text reply to Hubitat (see sendReq below)
            sendReq(text);
            console.log(`Google Assistant: ${text} \n`);
            response.response = text;
            if (returnAudio) {
              self.sendTextInput(`broadcast ${text}`, null, gConfig)
                .catch((e) => console.log(e));
              returnAudio = false;
            }
          }
        })
        .on('end-of-utterance', () => {
          console.log('Done speaking');
        })
        .on('transcription', (data) => {
          console.log(data);
        })
        .on('volume-percent', percent => {
          console.log(`Volume has been set to ${percent} \n`);
          response.volume = `New Volume Percent is ${percent}`;
        })
        .on('device-action', action => {
          console.log(`Device Action: ${action} \n`);
          response.action = `Device Action is ${action}`;
        })
        .on('ended', (error, continueConversation) => {
          if (error) {
            console.log('Conversation Ended Error:', error);
            response.success = false;
            reject(response);
          } else if (continueConversation) {
            response.success = true;
            console.log('Continue the conversation... somehow \n');
            conversation.end();
            resolve();
          } else {
            response.success = true;
            console.log('Conversation Complete \n');
            fileStream.end();
            //self.joinAudio();
            conversation.end();
            resolve(response);
          }
        })
        .on('error', (error) => {
          console.log(`Something went wrong: ${error}`);
          response.success = false;
          response.error = error;
          reject(response);
        });
    });
  },

  setUser: function(n) {
    // set default assistant to first user
    const defaultUser = Object.keys(global.config.assistants)[0];
    let assistant = global.config.assistants[defaultUser];
    // check to see if user passed exists
    if (n) {
      const users = Object.keys(global.config.users);
      if (!users.includes(n.toLowerCase())) {
        console.log(`User not found, using ${defaultUser} \n`);
      } else {
        n = n.toLowerCase();
        console.log(`User specified was ${n} \n`);
        assistant = global.config.assistants[n];
      }
    } else {
      console.log(`No user specified, using ${defaultUser} \n`);
    }
    return assistant;
  },

  outputFileStream: function() {
    return new FileWriter(path.resolve(__dirname, 'response.wav'), {
      sampleRate: global.config.conversation.audio.sampleRateOut,
      channels: 1
    });
  },

  joinAudio: function() {
    if (!inputFiles.length) {
      playbackWriter.end('done');
      return;
    }
    const currentFile = inputFiles.shift();
    const stream = fs.createReadStream(currentFile);
    stream.pipe(playbackWriter, { end: false });
    stream.on('end', () => {
      console.log(currentFile, 'appended');
      self.joinAudio();
    });
  },
};

// Pushes the Assistant's reply into a Hubitat global variable via a Rule Machine
// trigger URL. Edit the app id (2832), global variable name (test2),
// access token and hub IP below to match your setup.
function sendReq(text) {
  // Strip '?' and ':' since they would break the trigger path
  const gaResponse = text.replace(/\?/g, '').replace(/:/g, '');
  const heUri = '/apps/api/2832/trigger/setGlobalVariable=test2:' + gaResponse + '?access_token=461f117c-95ad-4d52-8136-12345678789';
  // encodeURI escapes spaces, but leaves characters such as '/' and '#'
  // untouched, so replies containing them may still produce a malformed path
  const uriencode = encodeURI(heUri);
  console.log('uri: ' + uriencode);
  const options = {
    hostname: '192.168.1.129', // IP address of your Hubitat hub
    //port: 80,
    path: uriencode,
    method: 'GET',
    headers: {
      'Content-Type': 'application/xml'
    }
  };
  const req = http.request(options, function(res) {
    console.log('Status: ' + res.statusCode);
    console.log('Headers: ' + JSON.stringify(res.headers));
    res.setEncoding('utf8');
    res.on('data', function(body) {
      console.log('Body: ' + body);
    });
  });
  req.on('error', function(e) {
    console.log('problem with request: ' + e.message);
  });
  req.end();
}
```

Not sure what you’re asking. You can use assistant-relay to perform broadcast TTS on your Google Home devices. You can also send custom commands using the [CC] prefix.
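To make the distinction concrete, here is a rough sketch of how a message could map onto the `sendTextInput(text, user, converse)` arguments in the server code above. The mapping itself is an assumption for illustration, not the Hubitat driver's actual code: plain text becomes a `broadcast` (as the server's own startup message does), `[CC]` sends the text as a custom command, and `[CCC]` is assumed to be a custom command whose reply is returned (`converse`). `toRelayArgs` is a hypothetical name.

```javascript
// Hypothetical sketch: translate a driver-style message into the arguments
// that sendTextInput(text, user, converse) in assistant.js expects.
// Assumption: plain text = broadcast TTS, [CC] = custom command,
// [CCC] = custom command whose reply is returned (converse).
function toRelayArgs(message, user = null) {
  if (message.startsWith('[CCC]')) {
    return { text: message.slice(5).trim(), user, converse: true };
  }
  if (message.startsWith('[CC]')) {
    return { text: message.slice(4).trim(), user, converse: false };
  }
  // No prefix: speak the message on all speakers via "broadcast"
  return { text: `broadcast ${message.trim()}`, user, converse: false };
}

console.log(toRelayArgs('[CC]show front door camera on living room display'));
console.log(toRelayArgs('Dinner is ready'));
```

Either way the text ends up as a typed query to the Assistant, which is why a custom command behaves as if you had typed it into the Assistant rather than spoken it to a particular speaker.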

Not sure what you mean by “sending a text notification”. Do you mean using it for push notifications to your phone? If so, not that I am aware of.

If you want to send a push notification to your phone, there is the Join implementation that I currently use. Or you can use the IFTTT notification setup.

@ogiewon Up to now I've been running v1 of Assistant Relay with no issues. I'm rebuilding my Node.js server on a newer Mac capable of running newer versions of Xcode and, therefore, newer versions of Node.

Can you confirm, is 8.11.3 the newest version of node.js I can use or are there newer versions still that are also compatible with Assistant Relay?

Will I gain any advantage with Assistant Relay v2 or just a lot of pain in the installation and setup? I know you've explained this before. Sorry, but I cannot find where you answered that for me.

Great questions... as far as I know, you must use Node v8.x.x. I am not aware of anyone using Node 9 or 10 successfully.
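If you want the server to warn you when it is started on the wrong Node version, a minimal check could look like this. It only encodes the advice above (Node v8.x), which is based on reports in this thread rather than a documented requirement; `isSupportedNode` is a hypothetical helper name.

```javascript
// Minimal sketch: warn at startup unless running on Node 8.x, following the
// thread's (unverified) advice that Assistant Relay needs Node v8.
function isSupportedNode(version) {
  // process.version looks like "v8.11.3"; take the major number
  const major = parseInt(version.replace(/^v/, '').split('.')[0], 10);
  return major === 8;
}

if (!isSupportedNode(process.version)) {
  console.log(`Node ${process.version} detected; Assistant Relay is reported to need Node v8.x`);
}
```

Running `node -v` on the command line shows the same version string that `process.version` returns.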

I would recommend switching to Assistant-Relay v2, as that version is receiving Greg Hesp’s attention these days. You might even be able to simply change the driver on Hubitat from my v1 to my v2 driver to prevent having to create a new device and therefore modify all of your automations. This is just a guess, as I have never tried it. It should work though, since the only real difference is the format for the http requests to the NodeJS AR server.

Perfect. Thanks so much Dan!

Thanks! I got this installed on my Raspberry Pi and it is working great.

I wonder how I can issue a custom command to Google Assistant, as if I were saying "Ok Google, play some music". I tried to do this with the [CC] and [CCC] prefixes, but I get no response.

TTS works fine.

I would love to have Google play some random music when I arrive home!

That's because you are issuing the command to the Assistant Relay server, not to one of your Google Home speakers. If you issue that command to a Google Home speaker, that speaker is the one that starts playing, correct?

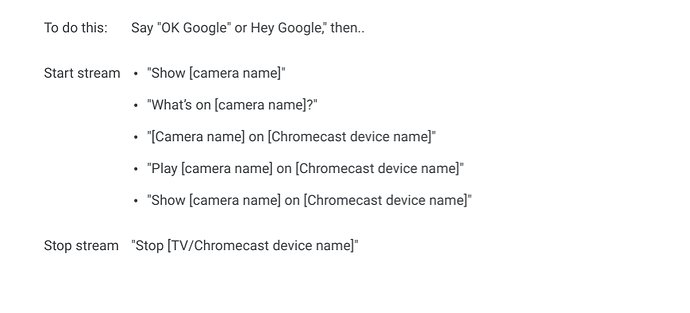

I haven't used this driver in a loooong time but wanted to play around with it and see what was new. These custom commands are great! I can now send my Nest cams to my Nest Hubs when HE receives motion!

- [CC]show front door camera on living room display

Unfortunately, "[CC]stop living room display" doesn't seem to work. Anybody find a command that stops the stream?

Thanks

I believe the correct command would be "turn off" not stop, but I can't seem to get it to work either.

I know this isn't a great option, but I believe the stop command from the Chromecast Beta implementation works correctly still. I'm having the same problem with Cast-web-api at the moment. I think google did something with the stop command.

Yeah, I know... it works when you say it to your GH, but for some reason the Assistant SDK doesn't like "stop" anymore. I don't know why; I just know it's affecting Cast-Web-API as well, which makes me think it's not just an AR problem but an Assistant SDK problem. But I could be totally wrong... it's just an idea.

I was going to suggest the stop command also, but I'm not using the Chromecast integration at this time and didn't know if you were either.

Okay... I don't know what I am doing wrong, but I cannot get PM2 to work. I ran the assistant-relay installer fine, but after I run `sudo npm install pm2 -g` and then try to run `pm2 startup`, I get `-bash: pm2: command not found`. I am following @ogiewon's instructions to the letter and it just will not work.

UPDATE: Never mind, it's Cast-web-API-CLI screwing with it. It uses PM2 for its new native process management. Unfortunately, that locks out PM2 for anything else to use! Lovely. UGH!

As @Ryan780 said, something changed with the Assistant SDK. I used to be able to use [CC] stop streaming, but that won’t work from Google Assistant Relay or Cast Web API now. But I can confirm that the Chromecast Integration (beta) does indeed stop the stream with the custom action stop()

I said I suspected that... and only after he reported having the same problem in Assistant Relay that I'm having with cast-web-api's stop command. But if you read the whole post, I said I might be completely wrong. Please don't quote me so inaccurately.