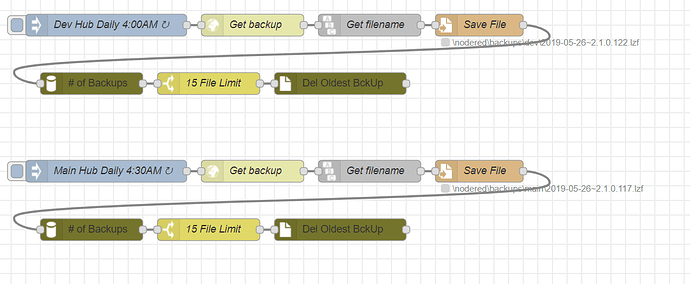

Sure. Keep in mind that this was exported from my Windows instance of NR, so any node that references a file location (here the "Get filename", "# of Backups", and "Del Oldest BckUp" nodes) needs to follow Linux path formatting, assuming you run your NR on a Linux distro. Basically, flip the slashes as necessary and put in the actual folders where you plan to store the backups.
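As a rough illustration of the "flip the slashes" step, here is a small JavaScript sketch. The helper name `toLinuxPath` is made up for this example and is not part of Node-RED, and the resulting folder is only the literal translation of my Windows path; you would still substitute the folder you actually use.

```javascript
// Hypothetical helper (not part of Node-RED): turn a Windows-style path
// into a Linux-style one by swapping backslashes for forward slashes.
function toLinuxPath(windowsPath) {
    // Collapse any run of backslashes into a single forward slash.
    return windowsPath.replace(/\\+/g, "/");
}

// The folder used in this flow, written as a JavaScript string literal.
const linuxPath = toLinuxPath("\\nodered\\backups\\dev\\");
console.log(linuxPath); // /nodered/backups/dev/
```

Note that this only handles the slashes. A path with a drive letter (such as `C:`) would still need to be rewritten by hand.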

[
    {
        "id": "31f80583.137ffa",
        "type": "file",
        "z": "1a25485a.160c68",
        "name": "Save File",
        "filename": "",
        "appendNewline": false,
        "createDir": true,
        "overwriteFile": "true",
        "encoding": "none",
        "x": 660,
        "y": 40,
        "wires": [
            [
                "fcae276c.cfdc28"
            ]
        ]
    },
    {
        "id": "c916945e.2a3d68",
        "type": "inject",
        "z": "1a25485a.160c68",
        "name": "Dev Hub Daily 4:00AM",
        "topic": "",
        "payload": "",
        "payloadType": "date",
        "repeat": "",
        "crontab": "00 04 * * *",
        "once": false,
        "onceDelay": 0.1,
        "x": 150,
        "y": 40,
        "wires": [
            [
                "67731afb.2979e4"
            ]
        ]
    },
    {
        "id": "67731afb.2979e4",
        "type": "http request",
        "z": "1a25485a.160c68",
        "name": "Get backup",
        "method": "GET",
        "ret": "bin",
        "paytoqs": false,
        "url": "http://192.168.7.1.111/hub/backupDB?fileName=latest",
        "tls": "",
        "proxy": "",
        "authType": "basic",
        "x": 350,
        "y": 40,
        "wires": [
            [
                "8eadb6f5.01e168"
            ]
        ]
    },
    {
        "id": "8eadb6f5.01e168",
        "type": "string",
        "z": "1a25485a.160c68",
        "name": "Get filename",
        "methods": [
            {
                "name": "strip",
                "params": [
                    {
                        "type": "str",
                        "value": "attachment; filename="
                    }
                ]
            },
            {
                "name": "prepend",
                "params": [
                    {
                        "type": "str",
                        "value": "\\\\nodered\\\\backups\\\\dev\\\\"
                    }
                ]
            }
        ],
        "prop": "headers.content-disposition",
        "propout": "filename",
        "object": "msg",
        "objectout": "msg",
        "x": 510,
        "y": 40,
        "wires": [
            [
                "31f80583.137ffa"
            ]
        ]
    },
    {
        "id": "fcae276c.cfdc28",
        "type": "fs-ops-dir",
        "z": "1a25485a.160c68",
        "name": "# of Backups",
        "path": "\\nodered\\backups\\dev\\",
        "pathType": "str",
        "filter": "*",
        "filterType": "str",
        "dir": "files",
        "dirType": "msg",
        "x": 130,
        "y": 120,
        "wires": [
            [
                "6a93c3f4.90cd5c"
            ]
        ]
    },
    {
        "id": "6fc01da3.081d04",
        "type": "fs-ops-delete",
        "z": "1a25485a.160c68",
        "name": "Del Oldest BckUp",
        "path": "\\nodered\\backups\\dev\\",
        "pathType": "str",
        "filename": "files[0]",
        "filenameType": "msg",
        "x": 470,
        "y": 120,
        "wires": [
            []
        ]
    },
    {
        "id": "6a93c3f4.90cd5c",
        "type": "switch",
        "z": "1a25485a.160c68",
        "name": "15 File Limit",
        "property": "files.length",
        "propertyType": "msg",
        "rules": [
            {
                "t": "gte",
                "v": "15",
                "vt": "num"
            }
        ],
        "checkall": "true",
        "repair": false,
        "outputs": 1,
        "x": 290,
        "y": 120,
        "wires": [
            [
                "6fc01da3.081d04"
            ]
        ]
    }
]
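If it helps to see what the flow does without importing it, here is a plain JavaScript sketch of the two decisions it makes. This is not how the nodes are implemented internally; the function names, the example filename, and the Linux folder are all made up for illustration.

```javascript
// Sketch of the "Get filename" step: remove the header prefix from the
// Content-Disposition value, then put the backup folder in front of it.
function buildBackupPath(contentDisposition, backupDir) {
    const name = contentDisposition.replace("attachment; filename=", "");
    return backupDir + name;
}

// Sketch of the "15 File Limit" and "Del Oldest BckUp" steps: once the
// folder holds `limit` or more files, the first entry of the directory
// listing is chosen for deletion; otherwise nothing is deleted.
function fileToDelete(files, limit = 15) {
    return files.length >= limit ? files[0] : null;
}

// Hypothetical header value and folder, for illustration only.
const savedAs = buildBackupPath(
    "attachment; filename=example-backup.lzf",
    "/nodered/backups/dev/"
);
console.log(savedAs); // /nodered/backups/dev/example-backup.lzf
```

One thing this makes visible: the flow deletes `files[0]`, so it only removes the oldest backup if the directory listing comes back with the oldest file first.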

@cuboy29, I forgot to add that you would need these nodes installed as well:

node-red-contrib-fs-ops

node-red-contrib-string