I have a cron job that runs nightly at 3am to do a hub backup via curl. This absolutely clobbers the Hubitat (stuff stops responding while the backup is happening). Is there any way we could lower the priority of the backup job so it doesn't clobber everything else?

I don't know what is up with backups, but 3 times in the past week or so, my Zigbee radio has gone offline as soon as the backup completed. The last time it happened I was able to turn the radio off and then back on and all was fine, but it is concerning.

Same here the last two mornings. The Zigbee network is locked. Looking at the Zigbee virtual switch that collects timings, it started having problems at 3:00 AM and never recovered. I had to reboot both days to get back to normal. Frustrating.......

This has been asked several different times here on the forum.

Well, seems like we need to keep asking. Lol.

I didn’t realize there was maintenance at 2am, so if that’s taking a significant amount of time, I wonder if it’s running into my backup script.


Is your backup script meant to download the latest backup? If so, don't run it until at least 4am. Mine runs at 5.

You think that will change the answer? You must not have been here for very long.

Not everyone has been in a friendly conversation with Bruce.

I'm going to be a good boy and I'm not going to touch that one with a 10-foot pole. It's a fastball right over the center of the plate that is just begging me to take a big whack at it... but I will resist.

I have been having this happen with backups that I run manually during the day. It only seems to affect the Zigbee network; I can still access the web UI and Z-Wave devices. I don't know about the nightly backup, since I've started using @Ryan780's (I think that's who posted it) RM example to reboot the hub at 4am every morning.

I don't do that. The only time my hub reboots is after an update or if I have to because it's locked up, which, knock on wood, hasn't happened in months.

I guess the question I would have is: what else are you doing while performing the backup during the day? It runs at night because that is the least busy time of day (for most people), but even while the maintenance is running, my hub still works... just a lot slower. I don't lose my Zigbee radio. How are you creating this backup?

Sorry, it was actually @waynespringer79's example.

I just do it the normal way and download it to the computer. Generally, I try to do it when there aren't automations running, since the first time it happened I thought a mode change may have flummoxed it.

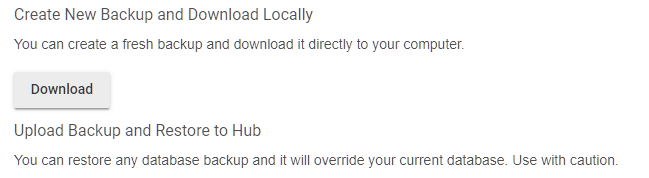

This is creating a new backup and downloading it. You can also just download the last backup that was created during maintenance the night before as well.

I like to do a backup if I have made changes to rules, which I have been doing a lot of lately to try to eliminate anything that may be causing an issue. The hub has been solid otherwise, but does start slowing down after 2 or 3 days. I stripped out everything that was custom or suspect and started adding things back very, very slowly, but started seeing this happen when I was still only using built-in apps, so I gave up and went with scheduled reboots, because I don't have time for troubleshooting this anymore. There must be something about the setup, but it takes several days to be noticeable, and I have had zero errors in the logs apart from the occasional Alexa skill error.

How do you grab the previous night's backup? My script generates a backup and downloads it...

I had the same problem. Someone else, I forget who, gave me the correct curl command to use:

```shell
curl http://HUB_IP/hub/backup |
  grep data-fileName |
  grep download |
  sed 's/<td class=\"mdl-data-table__cell--non-numeric\"><a class=\"download mdl-button mdl-js-button mdl-button--raised mdl-js-ripple-effect\" href=\"#\" data-fileName=\"/\ /' |
  sed 's/\">Download<\/a><\/td>/\ /' |
  sed 's/ //g' |
  tail -1 |
  xargs -I @ curl -o @ http://HUB_IP/hub/backupDB?fileName=@
```

Just replace HUB_IP with your hub's IP address. That should pull the most recent backup file without creating a new one.
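If that `sed` chain trips over the page markup, a less markup-sensitive variant is to pull out only the `data-fileName` attribute values. This is a minimal sketch under assumptions: it assumes the `/hub/backup` page lists backups as `data-fileName="..."` attributes in oldest-to-newest order (the same ordering the `tail -1` above relies on), and that `/hub/backupDB?fileName=NAME` serves that file. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: download the most recent existing Hubitat backup without creating a new one.
# ASSUMPTIONS: /hub/backup lists backups as data-fileName="..." attributes,
# oldest first, and /hub/backupDB?fileName=NAME serves that file.

# Read the backup page HTML on stdin and print the last data-fileName value.
latest_backup_name() {
  grep -o 'data-fileName="[^"]*"' |
    sed -e 's/^data-fileName="//' -e 's/"$//' |
    tail -n 1
}

# Hypothetical helper: fetch the newest backup from hub $1 into directory $2.
download_latest_backup() {
  hub_ip=$1
  dest_dir=$2
  name=$(curl -s "http://$hub_ip/hub/backup" | latest_backup_name)
  if [ -z "$name" ]; then
    echo "no backup file name found on http://$hub_ip/hub/backup" >&2
    return 1
  fi
  # Quoting the URL keeps shells like zsh from treating "?" as a glob.
  curl -s -o "$dest_dir/$name" "http://$hub_ip/hub/backupDB?fileName=$name"
}

# Example (hypothetical IP and path):
#   download_latest_backup 192.168.1.50 /mnt/backups/hubitat
```

Because the file name is extracted with a single `grep -o` pattern, no stray quote characters reach `xargs`, and an empty result is reported instead of silently downloading nothing.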

Hmm, that's throwing an unterminated quote error (at least in macOS).

Here's what I've got currently:

```shell
DATE=$(date '+%m%d%y-%H%M%S'); curl -s "http://HUB_IP/hub/backupDB?fileName=latest" --output "/mnt/backups/hubitat/$DATE.lzf"
```

Maybe that isn't generating a new backup... but it sure seems like it might be (it takes a while)

@Ryan780 Found your script here. Seems to work great.

https://raw.githubusercontent.com/ryancasler/Hubitat_Ryan/master/scripts/HE_backup.sh

I just modified it so it doesn't nuke all but the last 3 backups =) by changing `head -n -3` to `head -n -30`. I have things storing to my NAS, so I'd rather err toward lots of backups than worry about space. =)
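A negative count like `head -n -3` ("all but the last 3 lines") is a GNU coreutils extension; the BSD `head` on macOS rejects it. A portable way to keep only the newest N backups is sketched below. It is not the linked script's code: the function name `prune_backups` is hypothetical, and it assumes the backups are the only `*.lzf` files in the directory and that their names contain no newlines.

```shell
#!/bin/sh
# Sketch: keep only the newest $2 backup files (*.lzf) in directory $1.
# ASSUMPTIONS: backups are the only *.lzf files there and names contain no newlines.
prune_backups() {
  backup_dir=$1
  keep=$2
  # ls -1t lists newest first; tail -n +K starts printing at line K,
  # so every entry after the first $keep is deleted.
  ls -1t "$backup_dir"/*.lzf 2>/dev/null |
    tail -n +"$((keep + 1))" |
    while IFS= read -r old; do
      rm -f -- "$old"
    done
}

# Example: keep the 30 newest backups, roughly what "head -n -30" does in the linked script.
#   prune_backups /mnt/backups/hubitat 30
```

Sorting by modification time rather than by name means the result does not depend on how the backup files happen to be named.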

It's significantly faster than the previous method, so clearly it's downloading the latest backup rather than generating a new one. I also adjusted it to run at 5am... hopefully between those two changes, my hub will stop randomly dying in the middle of the night. Last night's backup was only 9KB, so the hub must have died before then (or the backup just timed out).
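A 9KB file is a sign the download was cut short, and a cron script can catch that automatically. The sketch below flags a backup that is missing or smaller than a minimum size. The function name is hypothetical, and the threshold is something you would pick from the size of your own normal backups; no particular Hubitat backup size is assumed here.

```shell
#!/bin/sh
# Sketch: flag a backup file that is missing or suspiciously small.
# Returns 0 if the file exists and is at least $2 bytes, 1 otherwise.
check_backup_size() {
  file=$1
  min_bytes=$2
  if [ ! -f "$file" ]; then
    echo "backup missing: $file" >&2
    return 1
  fi
  # wc -c < file prints the byte count; tr keeps only the digits,
  # dropping the padding that BSD/macOS wc adds.
  size=$(wc -c < "$file" | tr -dc '0-9')
  if [ "$size" -lt "$min_bytes" ]; then
    echo "backup too small ($size bytes): $file" >&2
    return 1
  fi
  return 0
}

# Example (hypothetical path and threshold):
#   check_backup_size /mnt/backups/hubitat/latest.lzf 100000 || echo "check the hub"
```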

All I did was copy over the curl command. I don't know where you got what you linked to earlier.

I was using one from another forum thread.

Not sure why what you’d pasted earlier was causing issues.

Meh, works now. Thanks.

Has anyone noticed that 2.1.6 may have broken nightly backups? My script just keeps downloading the backup that was made on 2019-11-04...
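One way to notice this sooner is to compare each new download against the previous one and warn when they are byte-for-byte identical, which may mean the hub did not produce a new nightly backup. This is a sketch using the POSIX `cmp -s`; the function name is hypothetical, and an identical file is only a hint, not proof, that the nightly backup failed.

```shell
#!/bin/sh
# Sketch: warn when the newest download is identical to the previous one.
# Returns 0 if the new file looks fresh, 1 if it is missing or identical.
backup_is_new() {
  newest=$1
  previous=$2
  if [ ! -f "$newest" ]; then
    echo "missing: $newest" >&2
    return 1
  fi
  # No previous backup to compare against: treat the new one as fresh.
  [ -f "$previous" ] || return 0
  # cmp -s prints nothing and exits 0 only when the files are identical.
  if cmp -s "$newest" "$previous"; then
    echo "warning: $newest is identical to $previous" >&2
    return 1
  fi
  return 0
}

# Example (hypothetical file names):
#   backup_is_new /mnt/backups/hubitat/today.lzf /mnt/backups/hubitat/yesterday.lzf
```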

Does the hub no longer do nightly maintenance/backup?