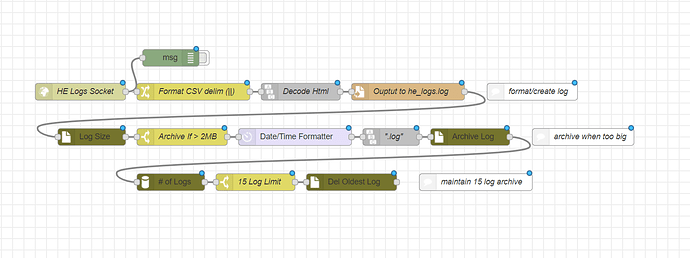

Quick node by node breakdown

- pulls data from the logsocket

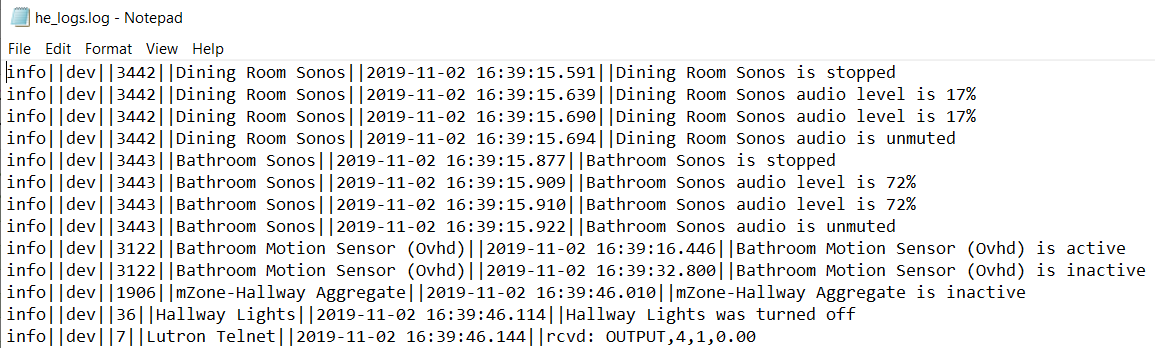

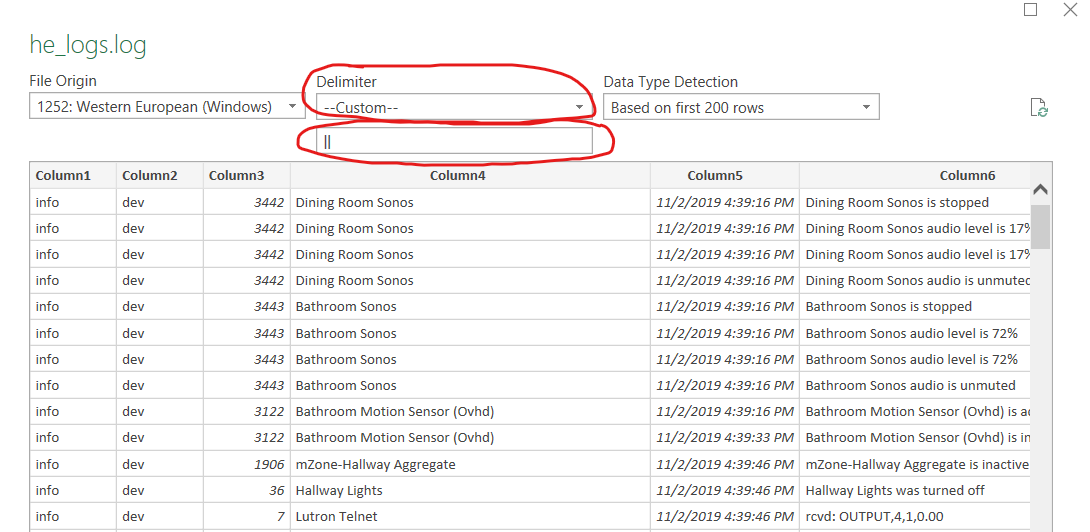

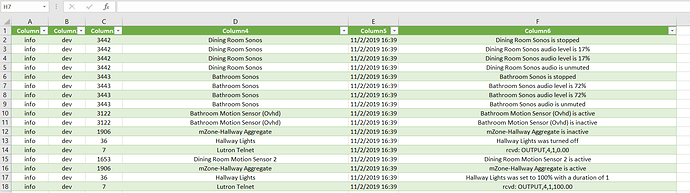

- extracts the data I wanted and converts to delimited text file (I chose ||| as this shouldn't appear anywhere in the logs normally)

- fixes anomalies that occurred when logs contains special characters or actual html.

- saves the log file to location of choice

5 - 9. sets the maximum size of log files, when reached, archives and created a new log file

10-12. sets maximum number of logs files to keep and deletes the oldest.
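For anyone who wants to see the formatting steps outside Node-RED, here is a rough Python sketch of what the "Format CSV delim" and "Decode Html" nodes do together. The field names and the `||` delimiter come from the exported flow; the function name and the sample entry are made up for illustration, and this is not what the flow itself runs.

```python
import html

DELIM = "||"  # same delimiter the change node in the exported flow uses
FIELDS = ("level", "type", "id", "name", "time", "msg")  # fields the flow keeps


def format_log_line(entry: dict) -> str:
    """Join the selected fields with the delimiter, then decode HTML entities."""
    # Missing fields become empty strings so the column count stays constant.
    line = DELIM.join(str(entry.get(field, "")) for field in FIELDS)
    # Mirrors the "Decode Html" step: turns &amp; back into &, &gt; into >, etc.
    return html.unescape(line)


# Hypothetical log entry, only for demonstration.
sample = {
    "level": "info",
    "type": "dev",
    "id": 12,
    "name": "Porch Light",
    "time": "2020-01-01 10:00:00.000",
    "msg": "switch is on &amp; level &gt; 50",
}
print(format_log_line(sample))
```

Reading the file back is then just a matter of splitting each line on `||`.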

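The log size limit and the number of archived logs are easy to change: edit the threshold in the "Archive If > 2MB" switch node (2000000 bytes) and the count in the "15 Log Limit" switch node.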
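The archive and cleanup logic of nodes 5 to 12 can be sketched in plain Python roughly like this. The 2,000,000 byte threshold and the 15 file limit are the values from the flow; the function name, the `now` parameter and the explicit sort are my own assumptions for the sketch (the flow simply deletes the first file the directory node returns), and the archive folder is assumed to exist already.

```python
import os
import shutil
from datetime import datetime

MAX_BYTES = 2_000_000  # threshold from the "Archive If > 2MB" switch node
MAX_ARCHIVES = 15      # count from the "15 Log Limit" switch node


def rotate_log(log_path, archive_dir, max_bytes=MAX_BYTES,
               max_archives=MAX_ARCHIVES, now=None):
    """Archive log_path once it exceeds max_bytes, then prune the oldest archive.

    Returns the path of the new archive file, or None if nothing was archived.
    """
    if not os.path.exists(log_path) or os.path.getsize(log_path) <= max_bytes:
        return None

    # Timestamped name plus ".log"; a 24-hour clock keeps names sortable by age.
    stamp = (now or datetime.now()).strftime("%Y-%m-%d_%H-%M-%S")
    archived = os.path.join(archive_dir, stamp + ".log")
    shutil.move(log_path, archived)  # the next write starts a fresh log file

    # Assumption: sorting by name puts the oldest archive first.
    files = sorted(os.listdir(archive_dir))
    if len(files) >= max_archives:
        os.remove(os.path.join(archive_dir, files[0]))
    return archived
```

Calling `rotate_log("/yourPath/he_logs.log", "/yourArchivePath")` after each write would approximate what the flow does when the file node passes a message on.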

Below is my exported flow for logging. You will need to point the first node to the logsocket you created and replace the log path and archive path with your specific locations.
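If you would rather patch the export with a script than edit each node by hand, something along these lines might work. The node types and field names are taken from the exported flow; the function name, the example URL and the example directories are hypothetical placeholders, so treat it as a sketch and check the result in the editor after importing.

```python
import json


def customize_flow(nodes, ws_url, log_dir, archive_dir, log_name="he_logs.log"):
    """Point the exported flow at your own logsocket, log path and archive path."""
    for node in nodes:
        kind = node.get("type")
        if kind == "websocket-client":
            node["path"] = ws_url                        # logsocket URL
        elif kind == "file":
            node["filename"] = f"{log_dir}/{log_name}"   # active log file
        elif kind == "fs-ops-size":
            node["path"] = log_dir
            node["filename"] = log_name
        elif kind == "fs-ops-move":
            node["sourcePath"] = log_dir
            node["sourceFilename"] = log_name
            node["destPath"] = archive_dir
        elif kind in ("fs-ops-dir", "fs-ops-delete"):
            node["path"] = archive_dir                   # archive folder
    return nodes


# Tiny in-memory demo with two nodes; the new values are placeholders.
demo = [
    {"type": "websocket-client", "path": "ws://192.168.1.111/logsocket"},
    {"type": "file", "filename": "/yourPath/he_logs.log"},
]
patched = customize_flow(demo, "ws://hub.local/logsocket",
                         "/var/log/he", "/var/log/he/archive")
print(json.dumps(patched, indent=2))
```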

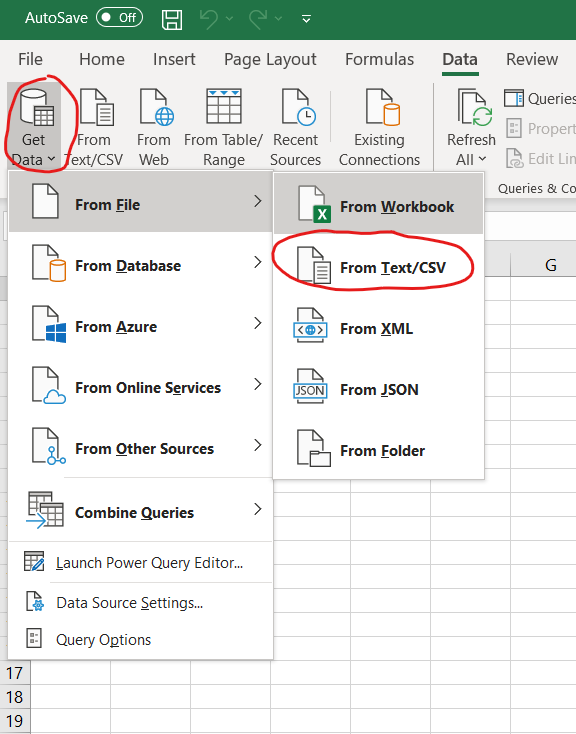

Excel details to come later.

[

{

"id": "9c740a.039f3bf8",

"type": "file",

"z": "43aea04d.3bd6e",

"name": "Ouptut to he_logs.log",

"filename": "/yourPath/he_logs.log",

"appendNewline": true,

"createDir": false,

"overwriteFile": "false",

"encoding": "none",

"x": 820,

"y": 260,

"wires": [

[

"6b7bba59.42d0c4"

]

]

},

{

"id": "6b7bba59.42d0c4",

"type": "fs-ops-size",

"z": "43aea04d.3bd6e",

"name": "Log Size",

"path": "/yourPath",

"pathType": "str",

"filename": "he_logs.log",

"filenameType": "str",

"size": "filesize",

"sizeType": "msg",

"x": 260,

"y": 340,

"wires": [

[

"b0409461.ec2518"

]

]

},

{

"id": "cd31194d.e094c8",

"type": "fs-ops-move",

"z": "43aea04d.3bd6e",

"name": "Archive Log",

"sourcePath": "/yourPath",

"sourcePathType": "str",

"sourceFilename": "he_logs.log",

"sourceFilenameType": "str",

"destPath": "/yourArchivePath",

"destPathType": "str",

"destFilename": "newname",

"destFilenameType": "msg",

"link": false,

"x": 930,

"y": 340,

"wires": [

[

"da05758.c7ae488"

]

]

},

{

"id": "da05758.c7ae488",

"type": "fs-ops-dir",

"z": "43aea04d.3bd6e",

"name": "# of Logs",

"path": "/home/stephack/backup/nodered/main/logs/log_archive",

"pathType": "str",

"filter": "*",

"filterType": "str",

"dir": "files",

"dirType": "msg",

"x": 400,

"y": 420,

"wires": [

[

"73b6cf78.d6a33"

]

]

},

{

"id": "7d3a757b.d97bdc",

"type": "fs-ops-delete",

"z": "43aea04d.3bd6e",

"name": "Del Oldest Log",

"path": "/yourArchivePath",

"pathType": "str",

"filename": "files[0]",

"filenameType": "msg",

"x": 720,

"y": 420,

"wires": [

[]

]

},

{

"id": "ff5df71f.907cc8",

"type": "moment",

"z": "43aea04d.3bd6e",

"name": "",

"topic": "",

"input": "timestamp",

"inputType": "msg",

"inTz": "America/New_York",

"adjAmount": 0,

"adjType": "days",

"adjDir": "add",

"format": "YYYY-MM-DD_hh-mm-ss",

"locale": "en_US",

"output": "newname",

"outputType": "msg",

"outTz": "America/New_York",

"x": 620,

"y": 340,

"wires": [

[

"7fd311fb.e9d21"

]

]

},

{

"id": "7fd311fb.e9d21",

"type": "string",

"z": "43aea04d.3bd6e",

"name": "\".log\"",

"methods": [

{

"name": "append",

"params": [

{

"type": "str",

"value": ".log"

}

]

}

],

"prop": "newname",

"propout": "newname",

"object": "msg",

"objectout": "msg",

"x": 790,

"y": 340,

"wires": [

[

"cd31194d.e094c8"

]

]

},

{

"id": "b0409461.ec2518",

"type": "switch",

"z": "43aea04d.3bd6e",

"name": "Archive If > 2MB",

"property": "filesize",

"propertyType": "msg",

"rules": [

{

"t": "gt",

"v": "2000000",

"vt": "num"

}

],

"checkall": "true",

"repair": false,

"outputs": 1,

"x": 420,

"y": 340,

"wires": [

[

"ff5df71f.907cc8"

]

]

},

{

"id": "73b6cf78.d6a33",

"type": "switch",

"z": "43aea04d.3bd6e",

"name": "15 Log Limit",

"property": "files.length",

"propertyType": "msg",

"rules": [

{

"t": "gte",

"v": "15",

"vt": "num"

}

],

"checkall": "true",

"repair": false,

"outputs": 1,

"x": 550,

"y": 420,

"wires": [

[

"7d3a757b.d97bdc"

]

]

},

{

"id": "62fe0342.ad756c",

"type": "comment",

"z": "43aea04d.3bd6e",

"name": "format/create log",

"info": "",

"x": 1040,

"y": 260,

"wires": []

},

{

"id": "eb6371f4.6c077",

"type": "comment",

"z": "43aea04d.3bd6e",

"name": "archive when too big",

"info": "",

"x": 1130,

"y": 340,

"wires": []

},

{

"id": "90358433.c12f58",

"type": "comment",

"z": "43aea04d.3bd6e",

"name": "maintain 15 log archive",

"info": "",

"x": 940,

"y": 420,

"wires": []

},

{

"id": "907fadd6.c24b8",

"type": "change",

"z": "43aea04d.3bd6e",

"name": "Format CSV delim (||) ",

"rules": [

{

"t": "set",

"p": "payload",

"pt": "msg",

"to": "msg.level & '||' & msg.type & '||' & msg.id & '||' & msg.name & '||' & msg.time & '||' & msg.msg\t",

"tot": "jsonata"

}

],

"action": "",

"property": "",

"from": "",

"to": "",

"reg": false,

"x": 440,

"y": 260,

"wires": [

[

"5989b2b4.2dacec"

]

]

},

{

"id": "5989b2b4.2dacec",

"type": "string",

"z": "43aea04d.3bd6e",

"name": "Decode Html",

"methods": [

{

"name": "decodeHTMLEntities",

"params": []

}

],

"prop": "payload",

"propout": "payload",

"object": "msg",

"objectout": "msg",

"x": 630,

"y": 260,

"wires": [

[

"9c740a.039f3bf8"

]

]

},

{

"id": "53189136.8abce",

"type": "debug",

"z": "43aea04d.3bd6e",

"name": "",

"active": false,

"tosidebar": true,

"console": false,

"tostatus": false,

"complete": "true",

"targetType": "full",

"x": 400,

"y": 200,

"wires": []

},

{

"id": "c0527395.c6582",

"type": "websocket in",

"z": "43aea04d.3bd6e",

"name": "HE Logs Socket",

"server": "",

"client": "b2f3490e.fc20e8",

"x": 240,

"y": 260,

"wires": [

[

"907fadd6.c24b8",

"53189136.8abce"

]

]

},

{

"id": "b2f3490e.fc20e8",

"type": "websocket-client",

"z": "",

"path": "ws://192.168.1.111/logsocket",

"tls": "",

"wholemsg": "true"

}

]