This proposal is for a standardized, user-adjustable battery percentage reporting algorithm, to be used with all battery-powered devices supported by HE. For existing drivers, the updated logic would be added as time permits. Consider this a starting point; feel free to improve it.

Why

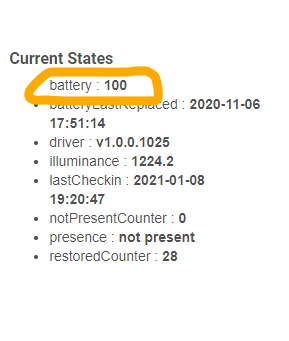

As many of us know, battery percentage is perhaps the most arbitrary and unreliable piece of device information, yet almost all of us rely on it to keep our battery-powered devices up and running. For example, I have a (non-critical) contact sensor that has been working at 0% for the past 3 months, while many of us, including me, have had devices fail at 25% and higher.

Features:

-

Display the device's reported event voltage in a device "Current States" as battery_volts, and in the event message.

battery_volts: 5.6

"Iris V3 Keypad battery was 71% 5.6 volts" -

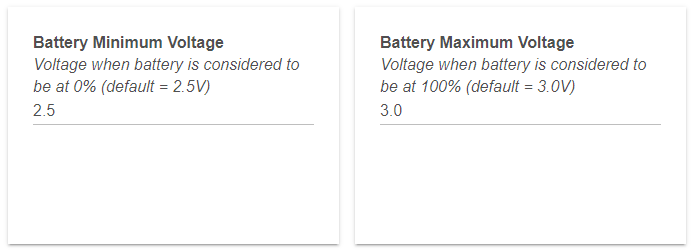

User adjustable Max and Min voltage fields allow for devices that don't conform to pre defined norms, standards, or manufacturer specifications

-

Battery type selection: Alkaline, Lithium, Rechargeable, SilverOxide. When changed, Max and Min voltage values are modified
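One way the battery type selection and the user-adjustable fields could fit together is sketched below. It assumes a hypothetical helper, `voltageRange`, that starts from the per-cell values used in the keypad driver example (Rechargeable, Lithium, and Alkaline as the default) and lets any user-entered Max or Min voltage replace the computed default. The example gives no values for SilverOxide, so in this sketch it simply falls through to the alkaline defaults, just as the driver's `default:` case does.

```groovy
// Hypothetical helper: per-cell excess/max/min voltages taken from the keypad driver example.
// Battery types not listed here (e.g. SilverOxide) fall back to the alkaline values.
Map voltageRange(String batteryType, int cells, BigDecimal userMax = null, BigDecimal userMin = null) {
    def perCell
    switch (batteryType) {
        case 'Rechargeable':
            perCell = [excess: 1.35, max: 1.2, min: 1.0]
            break
        case 'Lithium':
            perCell = [excess: 1.8, max: 1.7, min: 1.1]
            break
        default: // assumes alkaline
            perCell = [excess: 1.75, max: 1.5, min: 1.25]
            break
    }
    // Scale the per-cell values by the number of cells; a user-entered value,
    // when present (including 0), replaces the computed default
    [
        excess: perCell.excess * cells,
        max: userMax != null ? userMax : perCell.max * cells,
        min: userMin != null ? userMin : perCell.min * cells
    ]
}

def lithium = voltageRange('Lithium', 2)              // 2 lithium cells: max 3.4, min 2.2
def custom = voltageRange('Alkaline', 4, 6.4, 4.8)    // user-entered limits win
println lithium
println custom
```

Checking for `null` rather than using Groovy's Elvis operator keeps a deliberately entered value of 0 from being silently replaced by the default.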

Example of a standardized percentage formula, taken from a keypad driver that supports multiple device types reporting either 3 or 6 volts:

```groovy
// In parse(): the device reports battery voltage in tenths of a volt, hex-encoded
results = createEvent(getBatteryResult(Integer.parseInt(descMap.value, 16)))

private getBatteryResult(rawValue) {
    def linkText = getLinkText(device)
    def result = [name: 'battery']
    def volts = rawValue / 10

    // Defaults for 2 alkaline cells (3 volts nominal)
    def excessVolts = 3.5
    def maxVolts = 3.0
    def minVolts = 2.5
    def batteries = 2

    // UEI and Iris V3 keypads use 4 AA batteries (6 volts)
    if (!device.data.model?.startsWith('340')) {
        batteries = 4
    }

    // BatteryType is the driver's battery type preference; per-cell voltages are scaled by cell count
    switch (BatteryType) {
        case "Rechargeable":
            excessVolts = 1.35 * batteries
            maxVolts = 1.2 * batteries
            minVolts = 1.0 * batteries
            break
        case "Lithium":
            excessVolts = 1.8 * batteries
            maxVolts = 1.7 * batteries
            minVolts = 1.1 * batteries
            break
        default: // assumes alkaline
            excessVolts = 1.75 * batteries
            maxVolts = 1.5 * batteries
            minVolts = 1.25 * batteries
            break
    }

    if (volts > excessVolts) {
        // Implausibly high for the selected battery type: flag it, and cap the percentage
        // at 100 (volts * 100 / maxVolts would always exceed 100 here)
        result.descriptionText = "${linkText} battery voltage: $volts, exceeds max voltage: $excessVolts"
        result.value = 100
    } else {
        // Linear scale: minVolts -> 0%, maxVolts -> 100%, clamped to the 0..100 range
        def pct = (volts - minVolts) / (maxVolts - minVolts)
        result.value = Math.max(0, Math.min(100, Math.round(pct * 100)))
        result.descriptionText = "${linkText} battery was ${result.value}% $volts volts"
    }

    return result
}
```
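Because `getBatteryResult()` depends on the hub's `device` object and the `BatteryType` preference, its arithmetic is easier to check in isolation. The sketch below pulls the hex decoding, the clamped linear formula, and the two resulting events (`battery` and the proposed `battery_volts`) into plain Groovy functions. The function names and the exact shape of the event maps are illustrative assumptions, not Hubitat APIs.

```groovy
// Hypothetical helpers that mirror getBatteryResult() without any hub dependencies.

// The device reports tenths of a volt as a hex string, e.g. "1C" -> 28 -> 2.8 volts
BigDecimal decodeVolts(String hexValue) {
    Integer.parseInt(hexValue, 16) / 10
}

// Linear interpolation: minVolts maps to 0%, maxVolts to 100%, clamped to 0..100
int batteryPercent(BigDecimal volts, BigDecimal minVolts, BigDecimal maxVolts) {
    def pct = (volts - minVolts) / (maxVolts - minVolts)
    int rounded = Math.round((pct * 100) as double) as int
    Math.max(0, Math.min(100, rounded))
}

// Builds the battery event plus the proposed battery_volts state as plain maps
List<Map> batteryEvents(String label, BigDecimal volts, BigDecimal minVolts, BigDecimal maxVolts) {
    int percent = batteryPercent(volts, minVolts, maxVolts)
    [
        [name: 'battery', value: percent, unit: '%',
         descriptionText: "${label} battery was ${percent}% ${volts} volts".toString()],
        [name: 'battery_volts', value: volts]
    ]
}

// Two alkaline cells: minVolts 2.5, maxVolts 3.0
def reading = decodeVolts('1C')               // 2.8 volts
println batteryPercent(reading, 2.5, 3.0)     // (2.8 - 2.5) / (3.0 - 2.5) = 0.6 -> 60
println batteryEvents('Keypad', reading, 2.5, 3.0)
```

Clamping both ends keeps the `battery` value within 0 to 100 even when a reading falls outside the configured limits, for example when the wrong battery type is selected.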