I don't care what you call me, just don't call me late for dinner!!

Sadly I don't have that. It looks messy, but all it's doing is outputting 1, and once we have 10, they are closed. If not, something isn't closed. Everything stops, and I'll be setting the mode back, so I can fix the issue and run it again.

I do kind of miss my webCoRE for that, as that would tell me which had the issue.

But I want to try and keep everything in one place. My first run was last night, and it was rapid! So long winded, but works

Ya, it's a node in the HA palette and once I learned about it I literally use it everywhere now. It could be a really useful addition to the HE palette in the future.

My goodnight routine was always slow because it would send an off command to 30-40 lights, close command to all the garage doors, lock commands to 5 locks, alarm commands, led change commands, etc.. etc.. There'd be so much traffic on the zwave mesh it would take a solid minute to complete. With this node it checks everything against the state it should be in, creates a list of the ones that don't match and only sends the commands to those devices. My goodnight routine now completes in 5-10s.
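To make the compare-then-send idea concrete, here is a rough sketch in plain JavaScript, the sort of thing that could sit in a function node. The data shapes and the `devicesNeedingCommand` name are made up for illustration; this is not how the HA node is actually implemented.

```javascript
// Hypothetical data shapes, not defined by Hubitat or Home Assistant.
// desired: the state each device should report once the routine has run,
//          plus the command that gets it there.
// current: the last known state of each device, keyed by device id.
function devicesNeedingCommand(desired, current) {
  return desired
    // Keep only devices whose known state differs from the target.
    // A device with no known state is also kept, so it still gets the command.
    .filter((d) => current[d.deviceId] !== d.state)
    .map((d) => ({ deviceId: d.deviceId, command: d.command }));
}

const desired = [
  { deviceId: "29", state: "off", command: "off" },
  { deviceId: "31", state: "off", command: "off" },
  { deviceId: "40", state: "locked", command: "lock" },
];
const current = { "29": "off", "31": "on", "40": "unlocked" };

const toSend = devicesNeedingCommand(desired, current);
console.log(toSend);
// Device 29 is already off, so only 31 and 40 get a command.
```

In a real flow each entry of `toSend` would become one message to a command node, so the mesh only sees traffic for devices that are actually in the wrong state.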

Well, it would have been even faster than that if you checked the current state with the hubitat device nodes and then just sent commands to the needed nodes. As the device node always checks the cached value in node-red the only thing that would go to the hub is the actual needed off commands. Yes, it is a lot of nodes you have to put on the sheet though.

I actually find that vastly superior over the way the HA node does it. But I did use that node a lot when I was using HA more, and it is pretty convenient.

The same type of node, but just checking against the hubitat cache in node-red, might be interesting depending on how it worked / was designed.

I've been trying to think through suggestions to fblackburn on more access to the device/attribute cache... Still ruminating on it though.

Maybe something like a multi-device node that can accept an array of deviceId+attribute as an input, and output an array of deviceId, attribute name, attribute value that then could be used in other nodes via split or function node.
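Roughly what I have in mind, as a sketch only. No such node exists today; the `lookupMany` name, the single `Map` cache and its `deviceId:attribute` keys are all assumptions for illustration.

```javascript
// Hypothetical shared cache keyed by "deviceId:attribute".
// The real node set keeps its cache per node, not in one Map.
const cache = new Map([
  ["29:switch", "off"],
  ["29:level", 40],
  ["31:switch", "on"],
]);

// Input: array of { deviceId, attribute }.
// Output: array of { deviceId, name, value }, in the same order.
// Attributes that are not in the cache come back with value null.
function lookupMany(requests, cache) {
  return requests.map(({ deviceId, attribute }) => {
    const key = `${deviceId}:${attribute}`;
    return {
      deviceId,
      name: attribute,
      value: cache.has(key) ? cache.get(key) : null,
    };
  });
}

const result = lookupMany(
  [
    { deviceId: "29", attribute: "switch" },
    { deviceId: "31", attribute: "switch" },
    { deviceId: "50", attribute: "lock" },
  ],
  cache
);
console.log(result);
```

The output array could then go through a split node, or be filtered in a function node, to pick out the devices that need a command.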

Yeah, I would have gone that way if I hadn't moved all my Zigbee devices off HE.

I've too many unsupported devices, and sick of them dropping constantly.

I'm now running all Zigbee through deCONZ, and all is surprisingly stable.

If the trade off is large flows, for stability, I'll take it

Curious why you find that superior to 1 node that does the same thing without having to place 100 nodes and a plate of spaghetti on your sheet lol

I did it that way initially for smaller automations like a double tap to turn on/off certain lights. I would check the state first then send only to those devices. But hadn't done it for the whole-house automations like goodnight and away.

For unknown reasons my simple flow stopped, with a lot of error messages (I can submit them later if needed, but I don't want to bother everybody with that before trying to solve the problem myself).

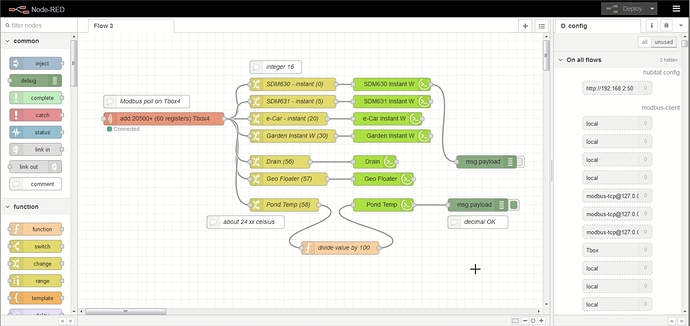

I noticed that my NR is full of tests I made before: MQTT (local mosquitto, cloud MQTT), also several modbus PLCs I connected (I have 4 of them and harvested data from both of them).

I tried to remove all those servers without success.

I exported my latest "Hubitat OK" flow with a few sensors on my 4th modbus PLC. Exported current flow, all flows (bigger file).

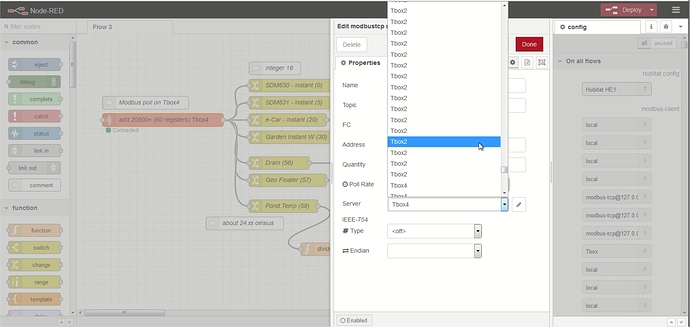

Tried several tests, reading node-red forum about similar errors: created new empty flow, imported the current flow, etc. Whatever I do, this damned flow always contains all the useless servers.

I exported the flow as text, erased all the useless servers, created a new flow and imported the cleaned text: no way, the servers are still there.

When I tried to configure my modbus server (not needed, the parameters are correct), the list is full of duplicates...

I still don't know how to get rid of those useless servers and I've reached my limit here.

Any ideas? I'm ready to erase everything if need be, but for now I doubt that this will even solve the problem...

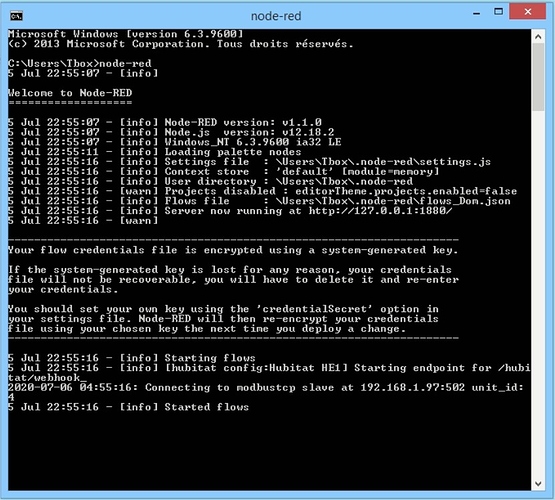

Update: I installed a brand new node-red latest stable release (12.18.2) + modbusTCP + Node-red Hubitat on an HP tablet running W8 (what I found on my desk for a quick restart), and installed my little flow. Managed to change the IP address on Maker API. Managed to add the token on node-red.

All seems OK after 5 minutes.

Will see if everything is OK in a few hours...

Superior from a data flow and impact on the hub/mesh standpoint, not simplicity to implement. I fully agree that the HA node is simpler to implement versus using individual device nodes.

In the hubitat nodes the values are all already cached in node-red, so are free to check - doesn't impact the hub or mesh networks.

So while it may be more complex to set up (takes a lot of hubitat device and switch nodes) it is less load on the hub to perform the checks.

But maybe we can think up a good way to make a somewhat equivalent node in the hubitat node-red node set. Needs some thought and mapping out first though.

Ahhhh they're cached. Yes this would be superior. Just more of a pain to set up when there's lots of devices. But only need to do that once then it's done.

As it is today - yes, it is cumbersome for large device counts.

Long term maybe we should look at making a new node to allow checking multiple devices at once. It would certainly be nice to get even more use out of those cached values! However it will take some thought before proceeding as it would require quite a bit of refactoring on the cache and code to support that versus the way it is done today (today it is a per-node cache, not a global cache).

Side note to others - you may not realize this, but since it is a per-node cache, if you use the same device/attribute in multiple nodes, then on initialization it actually makes a call to the hub for each node to build the local device cache. So if you use device A, attribute B in 10 different nodes it makes 10 different calls to the hub to build the initial cache. After initialization, all nodes are updated by the same incoming event, so there is no loading/penalty for reuse then.

Not a problem, per se, just something to keep in mind. Another way to think about it is that at initialization more nodes = more calls to hub = more time required to initialize. The calls are throttled, so not a hub loading issue, necessarily - but on large systems it explains why it can take tens of seconds to complete the initialization phase and start showing values.
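A toy illustration of that call count, for anyone who wants to see it spelled out. This is not the node set's actual code; `makeHub` and `fetchAttribute` are made-up stand-ins that only count how often the hub would be asked.

```javascript
// Hypothetical stand-in for the hub: it only counts requests.
function makeHub() {
  let calls = 0;
  return {
    fetchAttribute(deviceId, attribute) {
      calls += 1;
      return "off"; // placeholder value
    },
    get calls() {
      return calls;
    },
  };
}

// Ten nodes that all use device "29", attribute "switch".
const nodes = Array.from({ length: 10 }, () => ({
  deviceId: "29",
  attribute: "switch",
}));

// Per-node cache (how it works today): every node fetches its own initial value.
const perNodeHub = makeHub();
for (const n of nodes) {
  n.cache = perNodeHub.fetchAttribute(n.deviceId, n.attribute);
}

// Shared cache (the refactor being discussed): only the first node fetches.
const sharedHub = makeHub();
const shared = new Map();
for (const n of nodes) {
  const key = `${n.deviceId}:${n.attribute}`;
  if (!shared.has(key)) {
    shared.set(key, sharedHub.fetchAttribute(n.deviceId, n.attribute));
  }
}

console.log(perNodeHub.calls, sharedHub.calls); // prints: 10 1
```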

Also, the cache only caches defined devices/attributes - it does not cache every event value that comes in. That is largely because the "data type" is not specified in the Maker API event; finding the data type takes an additional call to the hub, and that call is only made for device attributes being used in a node.

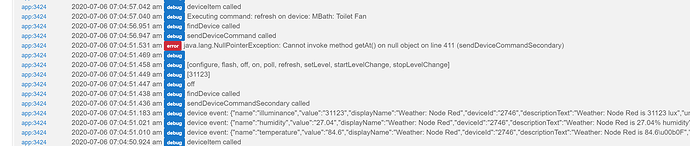

I get this error periodically on the same GE dimmer. Any thoughts?

Maker API Log:

Node Red error:

{"error":true,"type":"java.lang.Exception","message":"An unexpected error occurred."}

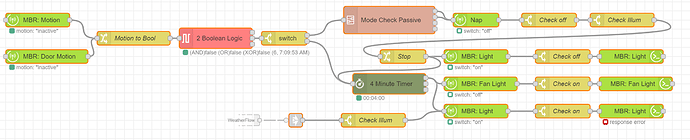

Flow:

I've never seen that, but I do have a ton of GE dimmers so maybe I can test/help.

What does the config of the "ON" "MBR Light" node look like?

[{"id":"19c6fbbd.d6e644","type":"hubitat command","z":"9688652a.2c0f68","name":"MBR: Light","server":"f17566a9.c052b8","deviceId":"29","command":"off","commandArgs":"","x":1390,"y":880,"wires":[]},{"id":"f17566a9.c052b8","type":"hubitat config","z":"","name":"","usetls":false,"host":"192.168.2.3","port":"80","appId":"3424","nodeRedServer":"http://192.168.2.9:1880","webhookPath":"/hubitat/webhook","autoRefresh":true,"useWebsocket":true}]

I should also say this is a Z-Wave dimmer, not Plus, and one of the first switches I ever bought, probably 3-4 years old...

Yeah, I don't have any of those.

Maybe turn on debug logging in Maker API and on the device and see what raw command it is trying to write?

If all else fails, delete the ON node in node-red and remake it and see if it does the same thing.

The Maker API log was posted above. It was calling an off command to the switch. I did a refresh and all is better now... But it will come back. That is why it has befuddled me.

As I go down the rabbit hole, I think I have a Z-Wave device acting up. I see off calls from Node-RED in the Maker API log, but the light switch is still on and there are no entries in the log for those being turned off. And this is randomly happening to 4 different light switches.

Any ideas what might be causing this?

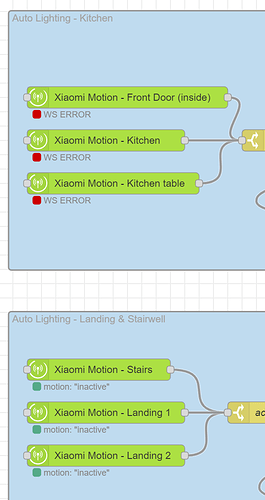

Only affecting some nodes.

I'm assuming it's a websocket error.

But the websocket is working fine (it tests ok from a browser).

Weird.

Weird, can you post the Node-RED log so we can see the error? The log location depends on the Node-RED install type (docker, .deb, source, etc.).

Do you have more than one config node? (That would explain the different behavior, not the error.) Or are all your devices bound to the same config node?

Same config node. It's on a Pi running Raspbian.

Off to bed now (really really late here!) will check logs tomorrow. Thank you!

Also......how to update the Hubitat nodes to get the new HSM one? I went into palette management and can't find any option to update the node set. It just says they are already installed.