That was the general train of thought. I asked about it elsewhere and was told, why bother upgrading now, lol.

Agreed. I am sticking with my InfluxDB 1.x instance. No reason to upgrade IMHO. I run it as an add-on on my Home Assistant Yellow system, along with Grafana and Node-RED. HAOS makes running these containers trivial. I really don't use HA for much, except as a way to integrate a few devices with Hubitat that currently are not supported. The add-ons are a great feature.

Yeah, I'll be sticking with v2.x

You probably have a fair amount of time to not worry about it. As of right now it looks like v3 is only for a very select use case. There is no OSS version and their cloud option isn't there either. One thing I would point out, though, is that there is a decent chance you will need to go to v2 to get to v3, so that may be something to think about. That, or just start from scratch.

Also, as @jtp10181 has pointed out, there is no need to worry about rewriting stuff for Flux (v2). I upgraded my database earlier this year out of concern that the older software hasn't received any updates in so long. If you use default configurations, the upgrade is pretty straightforward. Then you just need to create the DBRP connection, which is essentially one curl command to the server. You do need a few DB values, which are quickly obtained from the GUI that actually works in v2. Then you just create the Data source connection in InfluxDB.
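For anyone curious what that one request looks like, here is a rough Groovy sketch that only assembles it (nothing is sent). It assumes the InfluxDB v2 `/api/v2/dbrps` endpoint on the default port 8086. The host, token, IDs, and database name are all placeholders I made up, so substitute the values from your own GUI.

```groovy
import groovy.json.JsonOutput

// Assemble the pieces of a DBRP mapping request for InfluxDB v2.
// buildDbrpRequest and the cfg keys are hypothetical names for this sketch.
def buildDbrpRequest(Map cfg) {
    def body = [
        'bucketID'        : cfg.bucketId,         // bucket ID shown in the v2 GUI
        'database'        : cfg.database,         // the 1.x database name clients will ask for
        'default'         : true,                 // make this the default mapping for that database
        'orgID'           : cfg.orgId,            // organization ID shown in the v2 GUI
        'retention_policy': cfg.retentionPolicy   // the 1.x retention policy name
    ]
    return [
        url    : "${cfg.baseUrl}/api/v2/dbrps".toString(),
        headers: [
            'Authorization': "Token ${cfg.token}".toString(),
            'Content-Type' : 'application/json'
        ],
        body   : JsonOutput.toJson(body)
    ]
}

// Placeholder values only; none of these come from a real system.
def req = buildDbrpRequest(
    baseUrl        : 'http://localhost:8086',
    token          : 'YOUR_API_TOKEN',
    bucketId       : 'YOUR_BUCKET_ID',
    orgId          : 'YOUR_ORG_ID',
    database       : 'Hubitat',
    retentionPolicy: 'autogen'
)

println "POST ${req.url}"
req.headers.each { k, v -> println "${k}: ${v}" }
println req.body
```

The printed URL, headers, and JSON body map directly onto the `-H` and `-d` arguments of the equivalent curl command.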

I have it documented in this thread here, but I was directed to the whole process by @jtp10181

Using HE with InfluxDB, Grafana, with InfluxDB Logger -  Get Help / Integrations - Hubitat

It really isn't bad.

Hey folks, I've just had an oddity on one of my hubs which boiled down to something with InfluxDB Logger v2. Wanted to mention it in case it's of relevance to anybody else.

I have two hubs, both logging to the same InfluxDB. I upgraded "Hub 2" to 3.2.1 and then forgot to upgrade "Hub 1", which remained on 3.2.0. Woke up today to find "Hub 1" completely locked up, Zigbee network offline, severe load, yada, yada. Huge event queue for InfluxDB Logger.

I'm in the process of redoing stuff on my HE boxes anyway, so I didn't care. I just deleted InfluxDB Logger on both hubs, rebooted and the problem is gone. But should this have happened? Was it anything to do with the mismatched versions? When I rebuild things later should I avoid logging two hubs to the same InfluxDB (that would seem weird)?

Ta!

It is extremely unlikely that it is related to InfluxDB Logger. The most recent change was just about fixing a capability typo. All that would have done was enable devices that didn't previously work.

The only time I have seen an issue triggered by InfluxDB Logger is when I was testing the app's data protections when DB adds are failing. As the data protection adds more and more records, it will gradually grow the DB. That will eventually cause other problems. But as you can see, that is more a result of other failures.

The only way I can see a problem with two hubs is if you are using the advanced attribute features and the drivers for the devices involved include incompatible data for same-named attributes. That isn't exactly a problem with InfluxDB Logger, though, but with the device drivers involved.

I get a message in my logs for the app:

app:528 2023-10-13 12:14:14.857 AM error java.lang.NullPointerException: Cannot invoke method getDisplayName() on null object on line 367 (method updated)

Nothing to worry about? Is it breaking logging?

I would guess you deleted a device which you had selected in the app, and now it is looking for it.

Possibly opening and closing the dropdown that the device was selected in and then saving will clear it out of the list the app has stored.

Version 3.3.0 has just been released.

This version adds support for publishing Hub Variables to InfluxDB. The following types are supported:

- Number

- Decimal

- String

- Boolean

Publishing of DateTime variables is not supported.

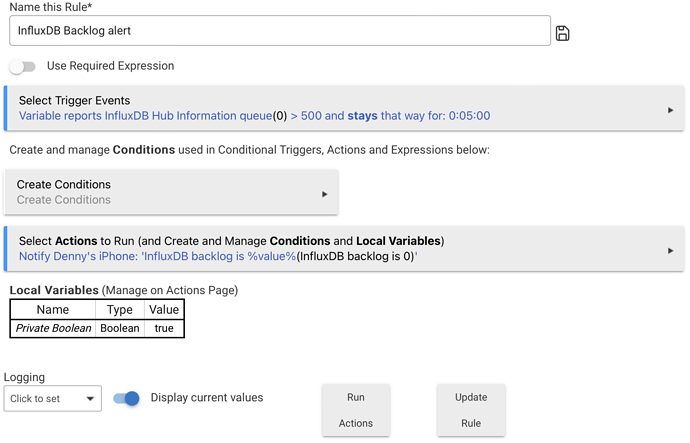

In addition, this version supports recording the outstanding InfluxDB queue size (length) in a global variable following each post. This allows the outstanding queue size to be used in other applications such as Rule Machine. An example use case, using a variable named "InfluxDB Hub Information queue", is shown below.

I know I asked you for a step by step eons ago; here's a belated thank you. I finally got some time to set everything up on my synology. The detailed process was a great help.

Does anyone know why I can't change this setting in the app?

Record InfluxDB backlog size in hub variable

I'd like to be able to monitor the number of pending writes.

Thanks.

Maybe double check a hub variable of type « number » is defined in the Hub Variables page of Settings.

Thanks @hubitrep appreciated!

New to this app on HE and I have some questions about backlog. Like @brumster above, I am not able to change this in the app.

I see references to a hub variable, but I don't see that one was created for InfluxDB Logger, and I must be missing where/how I should do this manually. Can someone point me to some information on this?

I'm assuming that this would solve the issue of my InfluxDB being "off" for a period of time and the resulting missing data.

Go to the settings page, click on "Hub Variables". You can create a new variable here, then you can go back to the app and assign this variable to the setting.

Assigning a variable to track backlog size won't solve anything. However by assigning a hub variable as explained, you should be able to track backlog size (and assess whether there's a backlogging problem) by going to the Logs page and selecting the Location Events tab. Changes to hub variables are visible there.

IIRC the app's config page lets you select any hub variable defined as type "number" for this purpose. The name doesn't matter but you probably want to choose something recognizable for the purpose. Initial value doesn't matter, it will get overwritten every time the app's backlog size changes.

Thanks, I found it after I posted...

Ran into a strange issue today. Long story short, after 75 days of uptime (!) the hub somehow went into a bad state (multiple devices and apps generating LimitExceededException errors, and such) and I had to reboot. After reboot, things got back to normal except for InfluxDB Logger. I noticed I had over 15,000 entries in the backlog, even though the limit is set to 5,000 - not sure how that happened. I went in and set the backlog limit to 1 and the batch size to 250, and still the app wasn't able to consume the backlog. Checked the app status and saw there were no scheduled jobs.

This error

Corresponds to the call to setGlobalVar() in the code

    // Remove the post from the queue
    state.loggerQueue = state.loggerQueue.drop(postCount)
    loggerQueueSize = state.loggerQueue.size()

    // Update queue size variable if in use
    if (prefQueueSizeVariable) {
        setGlobalVar(prefQueueSizeVariable, loggerQueueSize)
    }

    // Go again?
    if (loggerQueueSize) {
        runIn(1, writeQueuedDataToInfluxDb)
    }

Maybe that setGlobalVar() call needs to be wrapped in a try/catch to ensure runIn() gets called ? I got rid of the queue size hub variable setting in the app config and now the backlog is getting cleared normally.
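To illustrate what I mean, here is a minimal sketch of the try/catch idea, written as a plain Groovy script so it can run off-hub. The `state`, `setGlobalVar()` and `runIn()` definitions are stand-ins I wrote for the platform ones, the variable name `queueSize` is made up, and the stub fails on purpose to mimic the exception I was seeing. This is not the app's actual fix, just the shape of it.

```groovy
// Stand-ins for the Hubitat platform so this runs as a plain Groovy script.
// On a hub, state, setGlobalVar() and runIn() are provided by the platform.
state = [loggerQueue: (1..5).collect { "event${it}".toString() }]
prefQueueSizeVariable = "queueSize"   // hypothetical hub variable name
scheduled = []                        // records what the runIn() stub was asked to schedule

def setGlobalVar(String name, value) {
    // Simulate the failure seen while the hub was in a bad state
    throw new RuntimeException("simulated failure updating ${name}")
}

def runIn(int seconds, String handler) {
    // The stub just remembers the handler name instead of scheduling anything
    scheduled << handler
}

def writeQueuedDataToInfluxDb(int postCount) {
    // Remove the post from the queue
    state.loggerQueue = state.loggerQueue.drop(postCount)
    def loggerQueueSize = state.loggerQueue.size()

    // Update queue size variable if in use, but never let a failure here
    // prevent the next run from being scheduled
    if (prefQueueSizeVariable) {
        try {
            setGlobalVar(prefQueueSizeVariable, loggerQueueSize)
        } catch (Exception e) {
            println "Could not update ${prefQueueSizeVariable}: ${e.message}"
        }
    }

    // Go again?
    if (loggerQueueSize) {
        runIn(1, "writeQueuedDataToInfluxDb")
    }
    return loggerQueueSize
}

def remaining = writeQueuedDataToInfluxDb(2)
println "remaining=${remaining}, scheduled=${scheduled}"
```

Even though the variable update throws every time, the follow-up run still gets scheduled, so the backlog would keep draining.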

Not really sure what happened that led to this situation but I'll try to investigate further.

I have had similar issues. It is all about the hub getting into a bad state and causing the running jobs for InfluxDB Logger to fail and not schedule. Once the hub and app get into that bad state, it unfortunately can't recover without help. Once this happens, I have found the best way to recover is to simply open the app and click Done. That should trigger the app to do a check of the backlog and start to process the data. Sometimes, if the backlog gets really big, it can take a good amount of time to process each batch initially. Just let it process and hopefully it will start to catch up.

That hub load message is because once the backlog gets over 10k or so, the CPU struggles to access the state value that holds the data.

I did that, and it did get things going ... once per "Done". I think because that exception was thrown on every call to setGlobalVar(), which meant the runIn() wasn't executed.

Unrelated, but I also learned 250 is too big a batch size; reducing it to 100 cleared the backlog much faster.

Finally, I found myself wishing for emergency tools on the app config page:

- a "pause logging" button (ignore new incoming events until unpaused)

- a "clear backlog" button