Hi Hubitat crew, I’ve been chewing on this for quite a long time, but without access to low level processes, it’s been impossible to prove with hard data.

So here are my long term observations and my thoughts on possible options to improve Hubitat responsiveness generally.

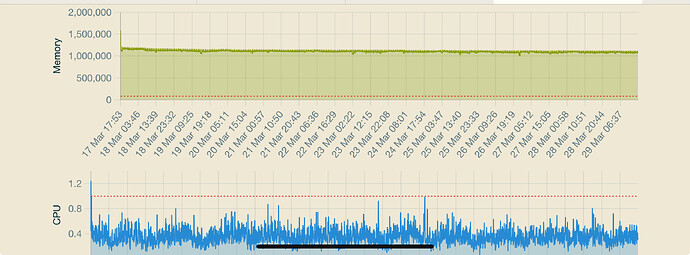

Problem Statement: I’ve found, that Despite not using even close to maximum CPU resources consistently, it’s possible for my Hubitat hubs to miss radio and hub mesh events.

I’ve noticed this since the C7 and can reproduce it on the C8 Pro too. The easiest way to do this is to connect something up, eg Home Assistant, to the Maker API and enable Post device events.

The most common scenario I found was missed zwave motion events. I would notice several per day at random times. I also noticed occasional automatons based on lights turning on failing to be triggered.

The 2nd scenario involved Hue lights connected via hub mesh (using the official hue integration) from my secondary hub. This was much less common tho.

Once I disconnected HA from my C8 Pro and deleted Hubitat Maker API, I haven’t had a single missed event in the last 2 days and the CPU usage patterns have barely changed.

Recommendation: I propose, and I realise i have zero knowledge of the Hubitat inner workings, that Hubitat Elevation uses the Quad Core SoC’s more strategically.

By this I mean assign a priority CPU core to the radios (if they are enabled), a priority CPU core to the HE operating system and then configure the remaining two cores to run user level processes.

Or choose a similar strategy that ensures the core OS and radios always have priority - like I said, I don’t know the current underlying thread prioritisation model.