This is the command I'm running to back up the directory locally. You could then set up a cron job on the NAS to pick up the file.

```shell
sudo zip -rq /PATH TO BACKUPS/node-red-pi4-backup-$(date +%Y-%m-%d).zip /PATH TO NODE RED/.node-red
```
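If your real paths contain spaces (as the placeholders do), the unquoted command would split them into separate arguments. Here is a minimal sketch of the same command with the paths kept in quoted variables. `NODE_RED_DIR`, `BACKUP_DIR` and the throwaway demo directory are hypothetical stand-ins, and `sudo` is left out so the sketch runs as an ordinary user.

```shell
#!/usr/bin/env bash
# Sketch: the same zip command with the placeholders moved into variables.
# NODE_RED_DIR and BACKUP_DIR are hypothetical names; the demo points them
# at a throwaway directory so the script can run anywhere.
demo=$(mktemp -d)
NODE_RED_DIR="$demo/node red/.node-red"   # stand-in for /PATH TO NODE RED/.node-red
BACKUP_DIR="$demo/node red backups"       # stand-in for /PATH TO BACKUPS
mkdir -p "$NODE_RED_DIR" "$BACKUP_DIR"
echo '[]' > "$NODE_RED_DIR/flows.json"    # dummy content to archive

# One archive per day, e.g. node-red-pi4-backup-2024-05-01.zip
BACKUP_FILE="$BACKUP_DIR/node-red-pi4-backup-$(date +%Y-%m-%d).zip"

# -r recurses into the directory, -q silences zip's per-file listing.
# The double quotes keep paths that contain spaces in one piece.
zip -rq "$BACKUP_FILE" "$NODE_RED_DIR"
echo "zip exit code: $?"
```

On the Pi you would set the two variables to your real paths and, as in the original command, run `zip` with `sudo` if the backup folder needs it. If today's archive already exists, `zip` updates and adds entries in it rather than starting a fresh file.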

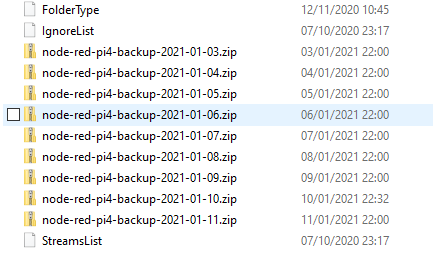

I then use Resilio Sync to copy the backups to my PC.

The flow keeps the last 7 days of backups.
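The pruning is a single `find` call (the flow's "delete older than 7 days" node). The sketch below exercises it on a throwaway directory holding one fresh file and one deliberately old one; the file names and the `touch -t` date are made up for the demo. It passes the directory plus `-name '*.zip'` instead of the shell glob `*.zip`, a small deviation from the flow: when no file matches, an unmatched glob reaches `find` literally and it reports an error.

```shell
#!/usr/bin/env bash
# Retention sketch: the flow's find expression, pointed at a throwaway
# directory instead of the real backup path.
backups=$(mktemp -d)

# One "fresh" backup (modified now) and one dated 1 January 2020.
touch "$backups/node-red-pi4-backup-new.zip"
touch -t 202001010000 "$backups/node-red-pi4-backup-old.zip"

# -mtime +7 matches files modified more than 7 whole days ago; find drops
# the fractional part of the age, so only files 8+ days old are matched.
# -print shows each file just before -delete removes it.
find "$backups" -maxdepth 1 -name '*.zip' -type f -mtime +7 -print -delete

ls "$backups"
```

Because of that rounding, running the prune right after the 22:00 backup should leave today's archive plus the seven before it.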

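Here's the full flow. It creates the backup on the Pi at 22:00 each day; you then just need to sort out something to move it off the Pi. Replace the PATH TO BACKUPS and PATH TO NODE RED placeholders with your own paths before importing it.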

```json
[{"id":"2d1a5950.d2c6e6","type":"tab","label":"Flow 1","disabled":false,"info":""},{"id":"aae1832f.83332","type":"exec","z":"2d1a5950.d2c6e6","command":"sudo zip -rq /**PATH TO BACKUPS**/node-red-pi4-backup-$(date +%Y-%m-%d).zip /**PATH TO NODE RED**/.node-red","addpay":false,"append":"","useSpawn":"false","timer":"","oldrc":false,"name":"backup .node-red","x":410,"y":160,"wires":[["e639f00f.06ee1"],["e639f00f.06ee1"],["70c0e369.8b18dc"]]},{"id":"2381e532.f66a0a","type":"inject","z":"2d1a5950.d2c6e6","name":"daily @2200","props":[{"p":"payload"},{"p":"topic","vt":"str"}],"repeat":"","crontab":"00 22 * * *","once":false,"onceDelay":0.1,"topic":"","payload":"","payloadType":"date","x":180,"y":160,"wires":[["aae1832f.83332"]]},{"id":"e73c88dc.d21868","type":"debug","z":"2d1a5950.d2c6e6","name":"backup-log","active":false,"tosidebar":true,"console":false,"tostatus":false,"complete":"payload","targetType":"msg","statusVal":"","statusType":"auto","x":990,"y":240,"wires":[]},{"id":"70c0e369.8b18dc","type":"switch","z":"2d1a5950.d2c6e6","name":"check op success","property":"payload.code","propertyType":"msg","rules":[{"t":"eq","v":"0","vt":"num"},{"t":"else"}],"checkall":"true","repair":false,"outputs":2,"x":230,"y":300,"wires":[["a272f3c.abf7a1"],["99b2ffc7.106ac"]]},{"id":"a272f3c.abf7a1","type":"exec","z":"2d1a5950.d2c6e6","command":"sudo find /**PATH TO BACKUPS**/*.zip -mtime +7 -type f -delete","addpay":false,"append":"","useSpawn":"false","timer":"","oldrc":false,"name":"delete older than 7 days","x":470,"y":260,"wires":[["e639f00f.06ee1"],["e639f00f.06ee1"],["14068310.122dad"]]},{"id":"99b2ffc7.106ac","type":"change","z":"2d1a5950.d2c6e6","name":"custom error msg","rules":[{"t":"set","p":"payload","pt":"msg","to":"❌ There was an error in Pi4 backup flow.\nCheck logs.","tot":"str"}],"action":"","property":"","from":"","to":"","reg":false,"x":570,"y":380,"wires":[["e73c88dc.d21868","7ee45bd3.4948e4"]]},{"id":"14068310.122dad","type":"switch","z":"2d1a5950.d2c6e6","name":"check op success","property":"payload.code","propertyType":"msg","rules":[{"t":"eq","v":"0","vt":"num"},{"t":"else"}],"checkall":"true","repair":false,"outputs":2,"x":490,"y":320,"wires":[["71b9f688.7a0718"],["99b2ffc7.106ac"]]},{"id":"71b9f688.7a0718","type":"template","z":"2d1a5950.d2c6e6","name":"success msg","field":"payload","fieldType":"msg","format":"handlebars","syntax":"mustache","template":"✅ RPi4 Backup complete.","output":"str","x":750,"y":240,"wires":[["e73c88dc.d21868","7ee45bd3.4948e4"]]},{"id":"e639f00f.06ee1","type":"switch","z":"2d1a5950.d2c6e6","name":"filter null","property":"payload","propertyType":"msg","rules":[{"t":"nnull"},{"t":"else"}],"checkall":"true","repair":false,"outputs":2,"x":660,"y":160,"wires":[["e73c88dc.d21868"],[]]},{"id":"7ee45bd3.4948e4","type":"pushover api","z":"2d1a5950.d2c6e6","keys":"54ad51a1.76db6","title":"","name":"","x":960,"y":380,"wires":[]},{"id":"54ad51a1.76db6","type":"pushover-keys","z":"","name":"Pushover"}]
```
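If you would rather use cron than Node-RED, the flow's logic can be sketched as a plain shell function: back up, continue only on exit code 0 (the "check op success" switch), prune, check again, then report. This is a substitute for the flow, not what the flow itself runs. `run_backup` and the demo paths are hypothetical, and the result is printed instead of being sent to Pushover.

```shell
#!/usr/bin/env bash
# Plain-shell sketch of the flow's logic. run_backup is a hypothetical name;
# its two arguments stand in for the PATH TO ... placeholders.
run_backup() {
  local src="$1" dest="$2"
  local file="$dest/node-red-pi4-backup-$(date +%Y-%m-%d).zip"
  mkdir -p "$dest"

  # "backup .node-red" exec node, then the first exit-code check.
  if ! zip -rq "$file" "$src"; then
    echo "❌ There was an error in Pi4 backup flow. Check logs."
    return 1
  fi

  # "delete older than 7 days" exec node, then the second exit-code check.
  if ! find "$dest" -maxdepth 1 -name '*.zip' -type f -mtime +7 -delete; then
    echo "❌ There was an error in Pi4 backup flow. Check logs."
    return 1
  fi

  # "success msg" template; the flow sends this text to Pushover.
  echo "✅ RPi4 Backup complete."
}

# Demo against throwaway directories.
demo=$(mktemp -d)
mkdir -p "$demo/.node-red"
echo '[]' > "$demo/.node-red/flows.json"
run_backup "$demo/.node-red" "$demo/backups"
```

To mirror the inject node's `00 22 * * *` schedule, a script calling this function could go in a crontab entry with the same timing; a NAS-side pull job would then need to run some time after 22:00.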