This community continues to amaze me. Really excellent work, @john.hart3706 & @gparra. Can't wait to give it a shot this afternoon.

Works. Excellent.

Glad to hear it!

Thanks for all of the instructions! I now have my Synology NAS pulling the backup every night!

How did you do it? I have an Asustor, but I have no idea how to make it pull the backup.

Synology has a task manager that runs user scripts. Does Asustor have that?

I have no idea, but thanks for the info. I can probably install something similar, or maybe it already has one.

Thanks.

One more reason for mDNS support, so I don't need to hard-code IP addresses into my scripts.

Hi All,

I modified my PowerShell script above to add support for SSL, which HE has supported since firmware 2.0.5.

Credentials are no longer sent in clear text, which is a lot better. Certificate validation has to be disabled, though, because the hub uses a self-signed certificate. If/when HE lets us upload our own certificate, this can be improved further.

Here is another Linux variant for getting the DB, using curl and wget:

```shell
# Log in and save the session cookie to cookie.txt
curl -c cookie.txt -d username=USERNAME -d password=PASSWORD http://HUB-IP/login

# Download the latest backup, keeping the filename the hub sends
wget --load-cookies=cookie.txt --content-disposition -P /YOUR_HOME/hubitat-backups/ "http://HUB-IP/hub/backupDB?fileName=latest"
```
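The same two steps can also be done with curl alone. The sketch below is a hedged variant, not a tested Hubitat tool: it URL-encodes the credentials (so a password containing `&` or `=` survives), stops on HTTP errors, and can optionally use https with certificate checks turned off (`--insecure`), because of the self-signed certificate mentioned earlier in the thread. It assumes the `/login` form fields are `username` and `password` (as in the commands above) and that https uses the same paths as http; `hub_backup` and `hub_backup_url` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch only: curl-only login + backup download for a Hubitat hub.
# Assumptions: the /login form fields are "username"/"password" (as in the
# commands above) and https uses the same paths as http. The function names
# are hypothetical.
set -eu

# Print the backup URL for HUB (an IP or hostname); SCHEME defaults to http.
hub_backup_url() {
  local hub="$1" scheme="${2:-http}"
  printf '%s://%s/hub/backupDB?fileName=latest\n' "$scheme" "$hub"
}

# hub_backup HUB DEST_DIR [USER PASS] [SCHEME]
# Logs in first only when USER is non-empty, then saves the backup in DEST_DIR
# under the filename the hub sends in its Content-Disposition header.
hub_backup() {
  local hub="$1" dest="$2" user="${3:-}" pass="${4:-}" scheme="${5:-http}"
  local insecure="" jar status=0
  # The hub's certificate is self-signed, so skip verification for https.
  if [ "$scheme" = "https" ]; then insecure=1; fi
  jar="$(mktemp)"

  if [ -n "$user" ]; then
    # --data-urlencode keeps characters such as & or = in the password intact.
    curl -sS -f ${insecure:+--insecure} -c "$jar" \
      --data-urlencode "username=$user" \
      --data-urlencode "password=$pass" \
      "$scheme://$hub/login" >/dev/null || status=$?
  fi

  if [ "$status" -eq 0 ]; then
    # -O -J: use the server-supplied filename; the subshell keeps the cd local.
    ( cd "$dest" && curl -sS -f ${insecure:+--insecure} -b "$jar" -O -J \
        "$(hub_backup_url "$hub" "$scheme")" ) || status=$?
  fi

  # Remove the temporary cookie jar whether or not the download worked.
  rm -f "$jar"
  return "$status"
}
```

For example, `hub_backup HUB-IP "$HOME/hubitat-backups" USERNAME PASSWORD https`. For a hub without a login (like the Node-RED flow further down, which sends no credentials), pass empty strings: `hub_backup HUB-IP "$HOME/hubitat-backups" "" "" https`. Saved as a script with that call at the bottom, it could be run from cron in place of the one-liner.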

My cron looks like this.

The first line runs at 4 am, changes to my home directory (`cd` with no argument), then retrieves the cookie, and finally downloads the backup DB.

```
00 4 * * * cd; curl -c cookie.txt -d username=USERNAME -d password=PASSWORD http://HUB-IP/login; wget --load-cookies=cookie.txt --content-disposition -P /home/USERNAME/Dropbox/hubitat-backups/ "http://HUB-IP/hub/backupDB?fileName=latest"
```

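This line deletes backups older than 5 days. (`find -mtime +5` rounds a file's age down to whole days, so a backup is actually removed once it is at least 6 days old.)

```
@daily find /home/USERNAME/Dropbox/hubitat-backups/ -mindepth 1 -type f -mtime +5 -delete
```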
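One side effect of an age-based rule is that if the nightly download stops working for a week, every backup ages out and the folder ends up empty. An alternative is to keep the newest N files regardless of their age. A minimal sketch, assuming GNU find (for `-printf`) and backup filenames without embedded newlines; `prune_backups` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Sketch: keep only the newest KEEP regular files in DIR and delete the rest.
# Unlike `find -mtime +5 -delete`, this never empties the folder just because
# no new backups have arrived for a while.
# Requires GNU find (for -printf), as found on most Linux systems.
set -euo pipefail

# prune_backups DIR KEEP   (hypothetical helper name)
prune_backups() {
  local dir="$1" keep="$2"
  # Emit "mtime<TAB>path" for top-level files, sort newest first,
  # skip the first KEEP lines, strip the timestamp, delete what remains.
  find "$dir" -mindepth 1 -maxdepth 1 -type f -printf '%T@\t%p\n' \
    | sort -rn \
    | tail -n +"$((keep + 1))" \
    | cut -f2- \
    | while IFS= read -r file; do
        rm -f -- "$file"
      done
}
```

In a crontab it could replace the `find` line, e.g. by putting `prune_backups /home/USERNAME/Dropbox/hubitat-backups 5` in a small script that cron runs daily.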

Could this be done in Python or Node-RED? I have both running in Docker containers and would love to get regular backups working.

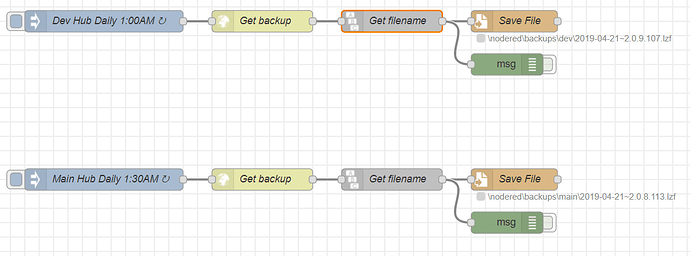

I use Node-RED for my daily backups.

The Get Backup node is an http request node that performs a GET to

`http://<hubip>/hub/backupDB?fileName=latest`

and returns a binary buffer, which is passed to the FileIn node to save to disk.

Can this work with a password? I assume I could trigger reboots this way as well.

I would assume so, but dealing with cookies is beyond my skill set.

@dan.t, @corerootedxb or @btk may possess the knowledge you require. I am but a mere fledgling in the ways of the Node.

See this thread for an awesome setup that @btk did. It does reboots and so much more. Of course, you would also need to include something to deal with passwords for this to work.

Is anyone else having trouble with the backup download taking a long time? I am using the URL mentioned above:

`http://HUB-IP-ADDRESS/hub/backupDB?fileName=latest`

I have 3 hubs and use Node-RED to download the latest backups every morning. One of my hubs takes close to 3 minutes to respond, so the Node-RED request times out; my other two hubs take less than 30 seconds. If I reboot this hub, it responds faster. This hub does have a larger backup because it is my "coordinator" hub.

Backup sizes for comparison:

| Hub | Backup size |
|---|---|
| Trouble hub | 10 MB |
| Hub 2 (works) | 5 MB |
| Hub 3 (works) | 4.4 MB |

I am testing the node-red-contrib-http-request-ucg node, which lets me set a timeout value, to see if that at least makes it work.
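To see whether the delay comes from the hub itself or from the network/Node-RED side, one option is to time the same download outside Node-RED with curl and a generous timeout. A minimal sketch; `fetch_timed` and its 300-second default are assumptions chosen to cover a roughly 3-minute response, not values from the thread:

```shell
#!/usr/bin/env bash
# Sketch: download URL to OUTFILE with a timeout and report time and size.
# fetch_timed and the 300 s default are hypothetical choices.
set -euo pipefail

# fetch_timed URL OUTFILE [MAX_SECONDS]
# Prints "<seconds> <bytes>" on success. On a timeout curl exits with code 28,
# which (under set -e) stops the calling script.
fetch_timed() {
  local url="$1" out="$2" max="${3:-300}"
  # --max-time caps the whole transfer; -w reports curl's own measurements.
  curl -sS -f --max-time "$max" -o "$out" \
    -w '%{time_total} %{size_download}\n' "$url"
}
```

For example, `fetch_timed "http://HUB-IP-ADDRESS/hub/backupDB?fileName=latest" latest-backup 300`, run once right after a reboot and once later, shows whether the slow response follows the hub or the path to it.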

@patrick any thoughts?

I just backed up 4 hubs in a minute or so, using the same URL you are:

- #1 is 9.0 MB
- #2 is 7.7 MB
- #3 is 16.0 MB
- #4 is 1.2 MB

Wow, those backup file sizes are small.

My DB is usually around 42 MB.

On my 3 hubs they are all 5-15 MB.

Maybe you have something logging excessively for some reason (or for no reason at all).

@ritchierich: couldn't this just be a networking issue?