Should anyone want to do this on Windows with curl:


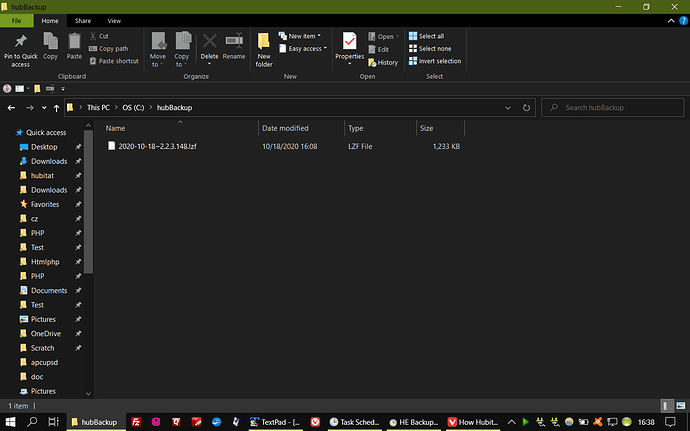

Create a directory such as C:\hubBackup (any name will do) to store the backups.


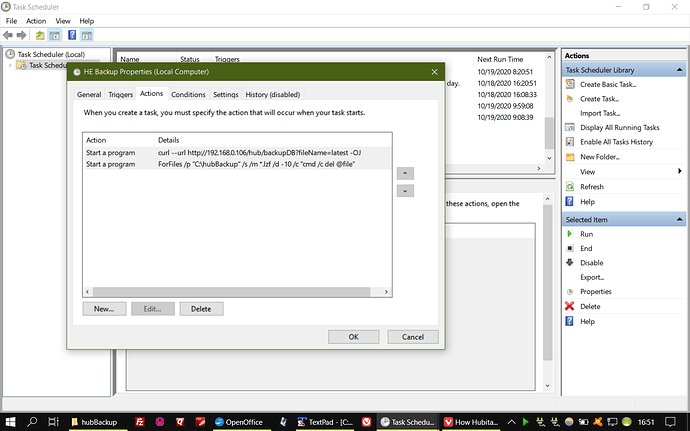

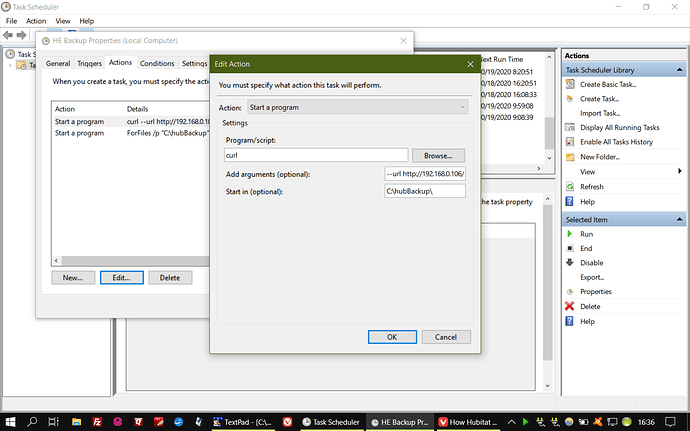

Create a daily scheduled task with the following action, and fill in the optional "Start in" field with C:\hubBackup\

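```
curl --url http://HubIP/hub/backupDB?fileName=latest -OJ
```

Replace HubIP with your hub's IP address. `-O` saves the download to a file, and `-J` takes that file's name from the Content-Disposition header the hub sends.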
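If you'd rather run a script than curl, here is a minimal Python sketch of the same download. It assumes the hub names the file through a Content-Disposition header (which is what curl's `-J` relies on). `HUB_IP`, `BACKUP_DIR`, `download_backup`, `filename_from_disposition` and the fallback name `hub_backup.lzf` are hypothetical names used for illustration.

```python
import os
import re
import urllib.request

HUB_IP = "HubIP"                 # placeholder: replace with your hub's IP address
BACKUP_DIR = r"C:\hubBackup"     # same folder as the task's "Start in" field
FALLBACK_NAME = "hub_backup.lzf" # hypothetical name used if the hub sends no filename


def filename_from_disposition(header, fallback):
    """Pick the file name out of a Content-Disposition header, similar to curl -J."""
    if header:
        match = re.search(r'filename="?([^";]+)"?', header)
        if match:
            # Keep only the base name so the header cannot point outside the folder
            return os.path.basename(match.group(1))
    return fallback


def download_backup(hub_ip=HUB_IP, backup_dir=BACKUP_DIR):
    """Download the latest backup; return its path, or None if the file already exists."""
    url = f"http://{hub_ip}/hub/backupDB?fileName=latest"
    with urllib.request.urlopen(url, timeout=60) as resp:
        name = filename_from_disposition(resp.headers.get("Content-Disposition"), FALLBACK_NAME)
        target = os.path.join(backup_dir, name)
        # Like curl -J, do not overwrite a file that is already there
        if os.path.exists(target):
            return None
        # Mode "xb" also fails if the file appears between the check and the write
        with open(target, "xb") as out:
            out.write(resp.read())
    return target
```

Calling `download_backup()` from a daily scheduled script replaces the curl action; it returns `None` when a backup with the same name was already saved.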

To delete backups older than 10 days:

```
ForFiles /p "C:\hubBackup" /s /m *.lzf /d -10 /c "cmd /c del @file"
```
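The same cleanup can be sketched in Python, assuming "age" means the file's last-modified time, as with ForFiles `/d`. `prune_old_backups`, `BACKUP_DIR` and `MAX_AGE_DAYS` are hypothetical names. One difference: ForFiles `/d -10` compares calendar dates, while this sketch uses an exact cutoff of 10 × 24 hours.

```python
import time
from pathlib import Path

BACKUP_DIR = Path(r"C:\hubBackup")  # folder holding the .lzf backups
MAX_AGE_DAYS = 10                   # matches /d -10 in the ForFiles command


def prune_old_backups(folder=BACKUP_DIR, max_age_days=MAX_AGE_DAYS, now=None):
    """Delete *.lzf files under folder (subfolders included, like /s) older than max_age_days."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # seconds per day
    deleted = []
    # Build the list first so files are not removed while the tree is being walked
    for path in list(Path(folder).rglob("*.lzf")):
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            deleted.append(path)
    return deleted
```

The `now` parameter is there so the cutoff can be tested. Left at its default, it uses the current time.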

Note: existing files with matching names are NOT overwritten.