I am trying to bring my 5 Iris Motion Sensors from Hubitat to Google Home. Two of them I am able to access via the "What's the temperature of [device name]" voice command, but on the other three I get a response that the device has not been set up yet. I can see the temperatures of all the sensors just fine in HE.

While setting up this integration, I apparently broke the built-in Google Home app. When I try to add it to Google Home, I get a "Couldn't update your settings. Check your connection" error.

At some point I was using the wrong {app ID} in the Fulfillment URL (perhaps the one that was originally set up for the built-in Google Home app). Any chance I broke some authentication setting? Is there any way to reset that?

I had that happen as well. I don't remember for sure, but I think I had to go to my Google account and remove a phantom linked Hubitat account. You can check that here: https://myaccount.google.com/accountlinking.

When I select the Video Camera Capability in the Community Google Home app in Hubitat, the virtual device I created with the driver doesn't show up. The list of selectable devices is blank.

I had to add the Actuator capability to the driver and use that in the app in order to get the device to show up on the list.

Also, the only thing I get on the display is text saying "Smart Home Camera". I know that the feed is viewable on Google devices since I am casting the stream to the display using CATT currently. So, what could possibly be causing the camera to not display?

For some reason Hubitat uses capability.videoCapture as the device selector for both its Video Camera and Video Capture device types, and I didn't notice that when building the mapping in this app. I guess it's a bug, but really, why would Hubitat do that? You can work around it by selecting "Video Capture" as the device type when setting up your camera device type.

Google is very picky about codecs and streaming protocols. CATT is probably doing some conversion. This app will not do any conversion. It just hands off a URL to Google. If that URL doesn't point to something the device can handle then it won't display.

The video is MJPG....so I guess I'll stick with the method I've been using.

Yes, that fixed it. Thanks.

I figured out a workaround for this: Instead of using device type "sensor" I use "thermostat". Now it works.

Google doesn't support MJPG; unfortunately it needs an H.264 + AAC stream. Using Blue Iris, the only output I was able to get working is HLS (m3u8).

As mbudnek mentioned, CATT would be doing some transcoding on the fly to get you the required target format.

CATT uses the Google Cast functionality which supports more formats than just h.264. CATT doesn't transcode. It runs on a Raspberry Pi Zero and there is no lagging in the video.

MJPEG is just a stream of static JPG images, so it needs to be transcoded into some compatible container for Google Nest.

From your link above, this is all the Nest Hub supports:

Google Nest Hub

- H.264 High Profile up to level 4.1 (720p/60fps)

- VP9 up to level 4.0 (720p/60fps)
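For anyone who wants to sanity-check a stream against that list, here is a rough Python sketch. It only encodes the two limits quoted above; the `Stream` class and `nest_hub_supports` function are names I made up for illustration, and it ignores the H.264 profile and the audio codec entirely, so treat it as a first filter rather than a guarantee that Google will play the stream.

```python
from dataclasses import dataclass

# Limits copied from the Nest Hub list above; nothing else is assumed.
NEST_HUB_LIMITS = {
    "h264": {"max_level": 4.1, "max_height": 720, "max_fps": 60},
    "vp9": {"max_level": 4.0, "max_height": 720, "max_fps": 60},
}


@dataclass
class Stream:
    """Hypothetical description of a video stream (illustration only)."""
    codec: str    # e.g. "h264", "vp9", "mjpeg"
    level: float  # codec level, e.g. 4.1
    height: int   # vertical resolution in pixels
    fps: int      # frames per second


def nest_hub_supports(stream: Stream) -> bool:
    """Return True if the stream fits within the limits listed above."""
    limits = NEST_HUB_LIMITS.get(stream.codec.lower())
    if limits is None:
        # Codec is not on the list at all (MJPEG lands here).
        return False
    return (
        stream.level <= limits["max_level"]
        and stream.height <= limits["max_height"]
        and stream.fps <= limits["max_fps"]
    )


if __name__ == "__main__":
    print(nest_hub_supports(Stream("h264", 4.1, 720, 30)))
    print(nest_hub_supports(Stream("mjpeg", 0.0, 720, 30)))
```

An MJPEG stream fails the check simply because the codec is not on the list, which matches what was said above about needing to transcode it first.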

What are you running on your pi for CATT? Interested in playing with that. If I use Google Assistant Relay, I can CATT my MJPEG output with zero lag and zero latency, but it doesn't link back to the google devices.

What do you mean? You can't get it to play on your Google devices? Or it doesn't show up on your list of Google Devices? No, it doesn't...because it's not a Google Device. But the only thing that creating a Google device gets you is ability to issue a voice command to see the camera. Which is very easily accomplished with a virtual switch and a Google Assistant Routine. Why burn all that energy recoding the video? Also, I want the camera feed to show up automatically. Not possible when it's a Google Device. You can only turn the feed on by your voice.

I don't understand your question. CATT runs in Python. You can control it with AR or you can use something like Node-RED if you wanted to. Or you could use the CATT Director driver/app that a user developed that is linked here on the forum.

No, that isn't the only thing you get. You can bring up the cameras from any nest device by just tapping on the screen as well as using your voice. If you want to force a camera to a display on a trigger, then you would be better off using Google Assistant Relay or another CATT solution since GAR would be required to call up this device anyways (might as well bypass the middleman), and you get a zero latency stream as you have mentioned. I am fully aware that a CATT stream isn't a google device, so of course it won't show up in google home.

The virtual switch route only works if you have a small Google deployment. Our home has 8 Nest Hubs, 2 Chromecast TVs + 9 cameras. With your virtual switch route, I would need to make 90 virtual switches in order to map each permutation. That's not a realistic solution for me to provide access to each feed from any device.
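To show where the 90 comes from, here is a quick Python sketch that enumerates every display/camera pair. The device names are placeholders I invented; only the counts (8 hubs, 2 Chromecast TVs, 9 cameras) come from the post above.

```python
from itertools import product

# Placeholder names; only the counts are taken from the post above.
displays = [f"hub{i}" for i in range(1, 9)] + ["tv1", "tv2"]  # 8 hubs + 2 TVs
cameras = [f"cam{i}" for i in range(1, 10)]                   # 9 cameras

# One virtual switch per (display, camera) combination.
switches = [f"{display}_{camera}" for display, camera in product(displays, cameras)]

if __name__ == "__main__":
    print(len(displays), "displays x", len(cameras), "cameras =", len(switches), "switches")
```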

No, you wouldn't. With IFTTT you can issue Google Assistant commands using a simple word phrase. Combined with Rule Machine (or a custom app) you can pass that phrase to Hubitat. So you would only need 10 devices (one for each display) or 9 (one for each cam), with the other side passed in as the phrase.

And as it is now, you still need 9 virtual devices. One for each camera. But of course, that is only if you want to use voice command from every single device. Maybe you don't want your kids to be able to see the cam in your bedroom.

Also, I wouldn't call your Google deployment large....I would call it HUMONGOUS! 8 Nest Hubs? Most households that have a Google display only have one. I have 2 displays, 2 speakers and 3 Chromecasts, and I would consider mine rather on the larger side.

Also, having 8 cameras? If you're up to that large of a system I would think you'd want to have an always-on display with all the feeds all the time. I mean, why else have that many cameras? Are you expecting an invasion or something?

Not sure how you came up with that. I don't know anyone that only has one. They all have at least two.

For displays I have 3 Hubs, 1 Max and 2 Lenovo smart clocks. Then throw in 6 or so Minis, 2 Nest cams and the Nest Hello doorbell. Very easy to end up with multiple devices.

Can't stream to those.

not displays

Also 10 is a lot more than 2. It's 5x as many actually.

Never said it wasn't.

5x fewer than who I was responding to.

It is my opinion that his setup is a lot larger than the average user. You can disagree with that if you want but you can't say that my opinion is wrong. It's my opinion. You think the average user has 10 cameras in their home?

Again....someone doing nothing but stirring the pot for absolutely no reason. This forum is ridiculous. You people need to learn that not every post requires you to respond.

Market research.

If there are 157 million speakers and only 60 million users, that means that there are barely enough for every user to have 2. And speakers with a display is a subset of that.

Wow, someone is in a bad mood today. So I guess you're the only one that can have an opinion? Take a deep breath and maybe you won't take everything so personally.

I won't respond to any of your posts again. Happy?!

Have a great day!

Maybe johnwick could take a page from his own playbook and not post a negative troll response to everyone else's posts? Like geebus man, if you don't want to use this trait, don't. Nobody is forcing you....

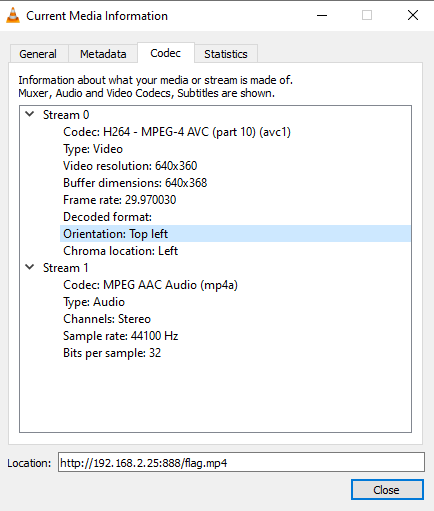

Back on topic, I'm interested in hearing what others are using as a stream for content for this camera trait. If you use the generic driver that was committed, you can stream a MP4 off a webserver (needs to be a URL though, not just a drive location). Here is a screen snip from VLC to show what the compatible codecs look like:

I'm using my Blue Iris output HLS/m3u8 stream. I am seeing that when I first call a camera up, it wants to start playing, puts up a buffering overlay and then resumes. Pausing and playing the video fixes this until about 48 seconds (HLS = 3 seconds x 16 video files per block), and then it needs to buffer again. I've opened a ticket with Blue Iris support to see what is up with the encoder. I tried that stream using a clappr test website, and the same thing happens, so it is definitely on the Blue Iris side.

Looking for other success stories with this.

Just an FYI, you can make a reliable stream using ffmpeg from an RTSP stream:

ffmpeg -rtsp_transport tcp -i "RTSP source" -vcodec libx264 -c:a aac -b:a 128k -hls_time 3 -hls_wrap 10 "c:\webserver\streaming.m3u8"

where:

"RTSP source": RTSP camera source

"c:\webserver\streaming.m3u8": is the folder/name on a webserver
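If you want to launch that from a script instead of typing it each time, something like the Python sketch below should work. It just rebuilds the same argument list as the command above; `build_hls_command` and `start_stream` are names I made up, and the example RTSP URL is a placeholder. One caveat: as far as I know `-hls_wrap` was deprecated and later dropped from newer ffmpeg builds, so if your ffmpeg rejects it you may need `-hls_list_size` together with `-hls_flags delete_segments` instead. I have left the original flags as posted.

```python
import shlex
import subprocess


def build_hls_command(rtsp_url, playlist_path, segment_seconds=3, wrap=10):
    """Build the ffmpeg argument list from the command posted above.

    rtsp_url:      RTSP camera source
    playlist_path: folder/name of the .m3u8 file on the webserver
    """
    return [
        "ffmpeg",
        "-rtsp_transport", "tcp",            # pull RTSP over TCP
        "-i", rtsp_url,
        "-vcodec", "libx264",                # re-encode video to H.264
        "-c:a", "aac",                       # re-encode audio to AAC
        "-b:a", "128k",
        "-hls_time", str(segment_seconds),   # seconds per HLS segment
        "-hls_wrap", str(wrap),              # may be rejected by newer ffmpeg builds
        playlist_path,
    ]


def start_stream(cmd):
    """Start ffmpeg in the background and return the process handle."""
    return subprocess.Popen(cmd)


if __name__ == "__main__":
    # Placeholder source URL; the output path is the one from the post above.
    cmd = build_hls_command("rtsp://camera.example/stream",
                            r"c:\webserver\streaming.m3u8")
    print(" ".join(shlex.quote(arg) for arg in cmd))
    # start_stream(cmd)  # uncomment once the printed command looks right
```

Passing the arguments as a list rather than one big string avoids quoting problems with spaces or special characters in the RTSP URL.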